About the Book

Would you hire an unknown singer without hearing them sing? Even with “five years of singing experience” in their résumé and a diploma from “Epic Singers School,” you would still like to hear them, wouldn’t you?

Surprisingly, most companies do the opposite and base hiring decisions on candidate claims and attained degrees. The good news is that you can detect the top employees other companies are missing by applying the Evidence-Based Hiring process.


This book is licensed under CC BY-ND (Creative Commons Attribution-NoDerivatives) 4.0 license:

https://creativecommons.org/licenses/by-nd/4.0/legalcode

You are free to:

Share — copy and redistribute the material in any medium or format for any purpose, even commercially.

Under the following terms:

Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

NoDerivatives — If you remix, transform, or build upon the material, you may not distribute the modified material.

CHAPTER 1: The Failure of Traditional Employment Screening

The traditional hiring process is broken. It’s a throwback to an industrial era hundreds of years ago, when we didn’t have employment science and relied on our hunches instead of data. If you’re reading this book, you probably feel the same way.

Together, we’re going to change this! Hiring can be made more accurate while requiring less time, resources, and frustration from both employers and candidates. It can be made more transparent, objective, and, perhaps sometimes, even enjoyable.

My First Hire

Many of us make the same mistakes when first hiring. I remember posting my first job ad: I was proud, as it was a sign that my little company was becoming serious. Customers were loving my product and I needed a software developer to help me out. It was obviously going to be the first of the many thousands of hires that would follow, putting me on the path to becoming the new Steve Jobs.

I had carefully crafted a job ad, put a paid listing on a local job site, and went to sleep. The next morning, I jumped out of bed in excitement, skipping across to my computer, pumped to see how many hundreds of the world’s most talented people had applied for my job. I did find résumés: not hundreds, but several from competent-looking candidates who were experts in multiple domains, from databases to front-end and XML to algorithms. They had more experience than me, and they’d worked on cool projects at previous companies. I started to expand my future company vision. Was I thinking too small? Maybe I could also do a hostile takeover of Microsoft and Amazon?

As I had more than 20 applicants, I screened the top five résumés. “I will hire only the best,” I said to myself, whilst stroking my beard contemplatively. I invited those five for an interview at the company “headquarters” (my apartment on the fourth floor of an old building, without an elevator).

If you have ever conducted a job interview for your company, you probably know that it feels like a first date. There are the nerves, the desire to impress, and a looming sense of possibility should things go well. Too nervous to sit down, I kept myself busy vacuuming one last time; the headquarters was spotless.

The first candidate arrived and I sat him down with a glass of orange juice. We talked about his résumé and work experience. I explained what the job was, and he confirmed that he was a very good fit for it. Great. We began talking about IT in general. I enjoyed the conversation, so I didn’t want to derail it by asking questions that might break the rapport we were developing. At the same time, a nagging voice in my head told me that I needed to test the candidate, not just chat with him.

After 45 minutes of chit-chat, I finally got the courage to ask the candidate if we could do some specific screening questions, and he agreed. This is an awkward moment in any interview, as you switch from a friendly conversation to an examination with you as the professor. I began with questions related to the programming framework that the job required. To my surprise, he clammed up, struggling to answer even half the questions adequately. The interview swerved off the rails, crashing into a ravine of my misplaced optimism and his bad bluffing. He was not qualified for this job.

I should have ended the interview then. Somehow, I couldn’t. Fifteen minutes later, I walked him out promising to “let him know if I was interested.” Disappointed, I returned to HQ’s boardroom (my kitchen table). What a disaster. We’d had such a nice chat in the beginning, too.

The next day, another candidate came to interview. I was more confident this time, and shortened the chit-chat to a mere thirty minutes. When I started asking prepared screening questions, his answers were mostly okay. It wasn’t clear if he didn’t understand some things, or merely had trouble expressing them. He agreed that I could send him a short coding test of basic programming skills. To my surprise, the candidate’s solutions were completely wrong and written in awful, amateurish code.

At least he tried to solve the test, though. The third candidate simply replied that he didn’t know how to solve the tasks. The fourth candidate failed the basic screening questions so badly that I didn’t even need to test him.

Just before the fifth candidate’s interview, he wrote that he was no longer interested in the job. I sat in my freshly vacuumed headquarters, looking out the window and thinking about what the hell was wrong with me. Why was I selecting the wrong candidates? I’d picked five from twenty, and they’d all been absolutely wrong for the position. Was I just bad at noticing red flags in résumés? How would I ever build a company? There had to be a better way to do this…

The Bell Curve

When I look back at those days, a decade ago, I can’t help but break out into a smile. My simple mistakes are funny in retrospect, because most first-time interviewers make them. My biggest mistake was not understanding the hiring bell curve.

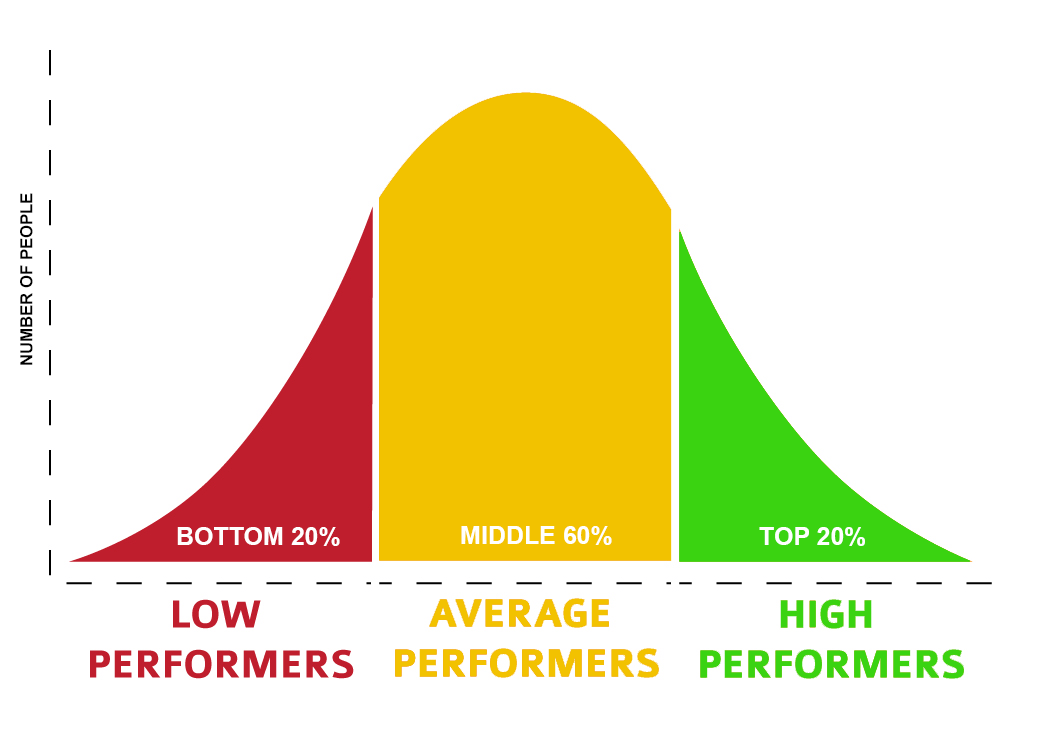

While it is nice to think that everybody’s performance is the same, it isn’t. If you recall math classes in school, probably 20% of people were good at math, 60% got by, and 20% couldn’t solve math problems without help. This distribution is so common in nature that it is called a normal distribution1 (also referred to as a bell curve), see the next figure.
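To make the 20/60/20 split concrete, here is a small sketch using Python’s standard-library `statistics.NormalDist`. It assumes performance scores are exactly normally distributed (a simplification of real data) and finds the z-score cutoffs for the bottom 20%, the top 20%, and the top 10% that later sections of this chapter aim for.

```python
from statistics import NormalDist

# Assumption: performance scores follow a standard normal distribution
# (mean 0, standard deviation 1), so cutoffs are expressed as z-scores.
performance = NormalDist(mu=0.0, sigma=1.0)

bottom_cut = performance.inv_cdf(0.20)  # 20% of people score below this
top_cut = performance.inv_cdf(0.80)     # 20% of people score above this
top10_cut = performance.inv_cdf(0.90)   # 10% of people score above this

# Share of people between the two 20% cutoffs: the "got by" middle band.
middle_share = performance.cdf(top_cut) - performance.cdf(bottom_cut)

print(f"bottom 20%: z < {bottom_cut:.2f}")
print(f"middle band: {middle_share:.0%} of people")
print(f"top 20%: z > {top_cut:.2f}; top 10%: z > {top10_cut:.2f}")
```

Under this assumption, the middle 60% spans roughly ±0.84 standard deviations around the mean, and the top 10% starts about 1.28 standard deviations above it.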

This distribution is used as a basis for scoring in every school, country, and domain of knowledge. You might think that everybody who graduates in medicine or physics is equally good, since those professions attract top performers, but when you plot the results of medicine or physics students, you also get a bell curve. Yes, they know much more about medicine or physics than the average person, but, inside the domain, there are still enormous differences between top and low performers.

Probably, you feel this about your profession too. When I ask my friends who finished medicine, law, or architecture, none of them have ever said, “Oh, all of us who finished school X are equally good.” If all professionals in a domain look equally good to me, it’s a sure sign that I don’t understand this area very well.

Even worse, the bell curve gets wider as we move from physical professions to knowledge-worker professions (lawyers, accountants, managers, programmers, scientists, etc.). A great dock worker might be able to unload double the cargo of a bad worker, but not five times as much, because their performance is physically limited: they’re still stuck with the same two arms and two legs as everyone else. For knowledge workers, however, the domain is the brain, and within each brain is a particular combination of knowledge, competence, motivation, and intellect. Have them apply this to tasks with hundreds of possible solutions, and the difference between individuals can be orders of magnitude. The best programmer might have the usable output of ten average hack coders. Lock one hundred physics graduates into a room for a year, and they won’t have achieved the output of a single Richard Feynman. It’s not fair that achievements are not distributed evenly among people or that we’re not all equal. But this inequality is a reality that academia and businesses must accept.

When hiring knowledge workers, the goal is always to hire from the top 10%. Unfortunately, the best performers usually have good jobs already. They rarely search for a new position and are probably tapped up for new jobs all the time. Often they don’t even have a résumé; they’ve simply never needed one. Bad performers, however, spend plenty of time on the job market applying for jobs and perfecting their résumés. So, when you publicly post a job ad, most of the applicants will be bad performers. It’s just math. If a great performer finds a job in a month, while a bad performer takes five months, the bad performer will likely apply to five times as many jobs. In that simplified case, to hire someone in the top 10%, you’d need to hire only about 1 in 50 applicants!
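The arithmetic behind that simplified case can be written out as a short sketch. It assumes, as the paragraph does, that the top 10% search for one month and everyone else for five, and additionally that every job seeker sends applications at the same monthly rate, so a group’s presence in the applicant pool is proportional to its population share times its months of searching.

```python
def top_share_of_applicants(top_fraction: float, top_months: float, rest_months: float) -> float:
    """Share of the applicant pool that comes from the top group.

    Assumes every job seeker applies at the same monthly rate, so each
    group's weight in the pool is population share x months searching.
    """
    top_pool = top_fraction * top_months
    rest_pool = (1.0 - top_fraction) * rest_months
    return top_pool / (top_pool + rest_pool)


share = top_share_of_applicants(top_fraction=0.10, top_months=1, rest_months=5)
print(f"top-10% share of applicants: {share:.1%}")  # 0.1 / (0.1 + 4.5)
print(f"roughly 1 in {1 / share:.0f} applicants")
```

Under these assumptions, only about 2.2% of applicants come from the top 10%, or 1 in 46, which the text rounds to 1 in 50.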

“We Hire Only the Best”

Why does all this matter? Because of the top 10% fallacy:

The majority of companies say that they only hire the top 10% of talent. But, if that was the case, then 90% of people in any profession would be unemployed.

Math tells us that most employed workers must have an average performance, by definition. The logical conclusion is that most companies are fooling themselves. Realizing this can make you a little paranoid. If you interview a candidate and they come out as knowledgeable and a hard worker, why weren’t they hired by the rival companies that also interviewed them? Why did their previous employer let them go rather than offering them a pay raise to stay? Hiring is a case of asymmetric information—the candidate and their previous employers have much more information than you can extract in a one-hour interview.

I did eventually get better at hiring, although it took a lot of effort and testing. Several times, I’d proudly implement a new hiring process that I was sure would find a ten-percenter. Then, I’d attend a professional conference where, in the break, we’d start talking about our hiring procedures. “We filter out 80% of people based on the résumé,” one said, and everybody nodded in agreement. “But, then, a phone call with a candidate, with a few quick questions, will eliminate another 50%,” said someone else, to another round of nods. “The onsite interview is the most important part,” the conversation continued, “we spend more than 90 minutes discussing previous jobs, education, and motivation and asking specific questions that prove their knowledge. This is how you hire the top talent.” We did a bit more nodding. I was terrified. If we were all doing exactly the same thing, then none of us would get the top talent.

There is a joke about two people running away from a bear. One person asks the other, “Why are we running? The bear is faster than both of us!” His running partner replies, “Well, I don’t need to be faster than the bear. I just need to be faster than you!” A similar approach applies to hiring. Illustratively speaking, if you want to hire the top 10%, then you need to have a better hiring procedure than nine other companies.

Hiring doesn’t get easier if you work in a big company; quite the contrary. If you have a great brand and you can hire people from all over the world, then you will have an insane number of applicants. In 2014, 3 million people applied to Google2, and only 1-in-428 of them were hired. There is no way to screen a population the size of Mongolia without resorting to some type of automated screening. But Google is still a small company. If Walmart had a 1-in-100 selection ratio, they would need to screen the entire working population of the US and still find themselves 25 million people short3. Before I had experience in hiring, I would often get angry with incompetent customer support representatives, delivery staff, or salespeople. How could a company hire them when they’re so incompetent? Now, I know better. I imagine myself as the head of a department hiring 500 people in a new city, for a below-average salary. Large enterprises hire on such a massive scale that they’re more focused on screening out the bottom 20% than on chasing the hallowed top 10% on the elusive far right of the bell curve.

This might sound like a bad thing, but it’s not, as it means a large majority of society can find employment. Hiring knowledge workers is not hiring on a massive scale. You can screen for the best and give them better benefits than the competition. A great employee that delivers twice as much is well worth a 50% higher salary.
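The salary claim in the last sentence is easy to check with hypothetical round numbers. This sketch assumes an average employee produces 1 unit of output for a salary of 1, while a great employee produces twice as much for 1.5 times the pay.

```python
# Hypothetical round numbers: output and salary relative to an average employee.
average_output, average_salary = 1.0, 1.0
great_output, great_salary = 2.0, 1.5

average_cost_per_unit = average_salary / average_output  # 1.0
great_cost_per_unit = great_salary / great_output        # 0.75

# How much cheaper each unit of the great employee's output is.
saving = 1 - great_cost_per_unit / average_cost_per_unit
print(f"the great employee's output costs {saving:.0%} less per unit")
```

Each unit of the great employee’s output costs 25% less. This ignores fixed per-head costs such as equipment and management time, which would tilt the comparison further toward the great employee.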

How Can We Fix Employment Screening?

For all the reasons discussed, the average hiring process just doesn’t cut it. The following chapters will teach you a new method called Evidence-Based Hiring. You will learn:

The common errors that people make when screening candidates.

Ways to overcome our biases.

The scientifically valid methods of screening.

How to automate much of your screening process.

The optimum position for a specific screening method in the hiring pipeline.

How to best communicate with candidates during the process.

The types of questions that you should ask to screen high-calibre candidates.

How to structure interviews.

How to measure the efficiency of your entire hiring process.

This book is focused specifically on everything which happens from the moment that you receive an application to the moment that you hire a candidate. So, out of this book’s scope are:

Sourcing applications: where to put your ads to find potential applicants.

Employer branding: whether you should offer bean bags, fruit baskets, and other creative ways to improve the public perception of your company.

Training and development: how to keep employees skilled, happy, and motivated.

CHAPTER 2: The Current Model Doesn’t Work Because We’re All Biased

Before we can fix what’s broken, we first need to understand why it’s broken and who broke it.

Let’s talk about intelligence for a moment. Try this brain-teaser:

A father and son are in a horrible car crash, which kills the father. The son is rushed to the hospital. Just as he’s about to go under the knife, the surgeon says, “I can’t operate—this boy is my son!”

How is this possible?

If you haven’t seen this puzzle, take no more than a minute to try and solve it before reading on.

The answer is simple: the surgeon is the boy’s mother. Were you able to solve it, and how many seconds did it take you to find the answer?

This is a classic question to test for gender bias. When we imagine a surgeon, we tend to imagine a male surgeon. Research from Boston University shows only 14% of students are able to come up with the answer4. Students that identified as feminists were still only able to find the solution 22% of the time.

You might think that you don’t have a gender or race bias, but even people in an in-group can be biased against its other members. Psychologists Kenneth and Mamie Clark created “the doll tests”, in which children were given a choice of a few dolls with different skin tones5. African-American children overwhelmingly chose white dolls, saying they “are prettier” and “better.”

As adults, we can’t avoid our biases either. Whether on a date or in an interview, the first thing that you notice is the other person’s physical appearance. It’s regrettable, but it’s human nature. We are not biased because we are dumb or uneducated. We are biased because, lacking other information, we resort to stereotypes to make a decision. This happens intuitively and can’t simply be turned off. If you think you can, I invite you not to think of a polar bear. Ironic process theory states6 that deliberate attempts to suppress certain thoughts make them more likely to surface.

Screening by Proxy

When hiring, as we already discussed, we have a lack of information, and so we are likely to use proxies to make a decision:

If a person is good at X (proxy), they are probably going to be good at Y (work).

Popular screening proxies are: school prestige, years of work experience, having worked at a big-name company, eloquence, self-confidence, appearance, punctuality, gender, and race.

Screening by proxy is not necessarily bad. When hiring, we must use proxies because we can’t know the results7.

There are better and worse proxies. Good proxies are supported by research and shown to lead to good hires—they’re signals, while other proxies are just noise.

Screening discrimination probably won’t be based on a single proxy. We tend to interview with a preconceived idea of what a stereotypical candidate for that position should look like. Let’s say I am hiring a surgeon and all candidates have equal qualifications. One candidate walks in for an interview and he is a tall, Caucasian male with a deep, assertive voice. He’s missing the stethoscope around his neck, but otherwise he’s the epitome of every TV ER doctor. When interviewing him, I’m reassured by this. The next candidate walks in and I’m surprised to see it’s a young woman with short, dyed blond hair, and a tattoo on her neck. She looks more like a punk-rock singer than a surgeon. The interview goes well, and her answers are good, but something in my gut just doesn’t feel right. A hidden part of my brain is telling me it has never seen a surgeon like this. Situations that are familiar make us relaxed, while situations that are unfamiliar make us stressed and uncertain. Meanwhile, another part of my brain is evaluating my social situation—what will my coworkers say if I pick such a strange candidate? Is it worth the risk?

This is how our hidden biases work:

If we don’t have enough information to decide between applicants, our emotions tell us to go with the most stereotypical candidate.

I am probably destined to choose the ER guy over the punk girl. It’s the safer choice. What do you think you would do?

Not only do people judge by proxy, they often judge by proxy of a proxy. For example, a common belief is that people who are nice to their dogs are nice to other people8. Double-proxy reasoning states that, if someone has a dog, they must have empathy and, therefore, they will be nice to everyone. A similar double proxy is that, if a man has a loving wife, he can’t be all that bad a human.

Sounds quite reasonable until you remember the guy in the next figure.

By all historical accounts, Hitler loved his dog Blondi and was a strong supporter of animal rights. The Nazis introduced many animal welfare laws, offenders of which were sent to concentration camps10. Hitler, himself, was a vegetarian11 and was planning to ban slaughterhouses after WWII because of animal cruelty. He was also a non-smoker and non-drinker. Hitler’s mistress, Eva Braun, loved him so much that she decided to share his fate. They got married in his underground bunker and, 40 hours later, they committed suicide together12.

Clearly, none of these proxies gave people a reliable read on Mr. Hitler, yet we haven’t updated them since. So, while there are slightly more women than men in the US, no woman has ever been elected president, and neither has a Hispanic or Asian American. These demographics, which represent 62% of society, have not been elected once in 58 presidential elections.

So, who gets votes? Well, all American presidents for the past 130 years, except one, had owned a dog13. All but two of them were married14. All but two were Christians15. All, except one, were white. No matter what people say they look for in a head of state, an older, white, religious male with a wife and a dog fits a stereotype of a good president.

The problem is not restricted to the average voter. When Albert Einstein graduated from ETH Zurich in 1900, both his professors of mathematics and physics refused to give him a job recommendation16. The professor of mathematics refused because Einstein skipped his math lectures. The professor of physics refused because Einstein called his lectures “outdated” and questioned why he didn’t teach modern theories. As a result, Einstein was the only one in his class of students who couldn’t find employment in his field. He was forced to work at a patent office, and only managed to get an academic job a few years after he published his special theory of relativity. If the main criterion for examination had been writing original physics papers, or if the testing of students had been blind, a young Albert would likely have been able to show what he had to offer. In the case of Einstein, his professors used a “did the student attend my lectures” proxy and a “did the student like my course” proxy.

It is important to realize that proxies are just proxies and they should not be taken into account without data proving their validity.

Proxies and Biases in Hiring

Let’s take a look at places where biases and incorrect proxies can derail a typical hiring process:

Résumé screening - résumés are stuffed with proxies which candidates have intentionally included to look better, and that can trip our biases. Candidates of the right age will put their birthdate, others will omit it. Attractive candidates will attach their photo, the others will not. Prominent schools and well-known companies will be put at the top, even if the candidate was not the best student or employee there. Some candidates’ “personal achievements” sections will list charity work, Boy Scouts, Mensa membership, or other proxies-of-a-proxy.

The telephone interview - here, we tend to ask candidates very simple questions which are related to their previous experience and résumé. Delivered this way, it is not a knowledge or work test. Instead, it’s more of a short communication skills test that extroverts will ace. Even if the job requires communication skills, this doesn’t mean a phone call is a good proxy for work-specific communication.

Interviews - most interviewers base their decisions on subjective feelings, which research shows are largely influenced by a 20-second first impression17.

Giving candidates tasks during interviews - here, bias is introduced when we watch a candidate solve a task. Being watched immediately puts them under pressure, which we often want for our “performs well under stress” proxy, but it’s an artificial situation that they’re unlikely to face once hired.

Standardized testing - although standardized testing is good, bias is introduced when we decide what to test. If we ask questions that require experience, then we bias against young graduates that may otherwise be great employees. If we administer verbal reasoning tests, we bias against non-native speakers.

We can’t rely on hunches or biases when hiring. We need to be both methodical and scientific. Fortunately, there is a great deal of hiring research that tells us which methods work and which, well, don’t.

CHAPTER 3: Not All Methods Are Equal

Since we’re all biased and we use incorrect proxies, why not just outsource hiring to experts or recruitment agencies? After all, they’ve been screening people for many years, so they must know how to do it right?

Not really. I was surprised to discover that many experts disagree with each other. Everybody praises their pet method and criticizes the others. Many of these methods look legitimate, but are based on fake science.

Hiring Science Gone Wrong

Fake science looks similar to real science, with impressive-looking studies, charts, and numbers—until you dig deeper into what those numbers actually represent.

For example, let’s look at popular personality tests. HR departments love personality tests, because they are universal—they need just one test for all candidates.

Many companies claim that their personality test has high statistical reliability18 (when a candidate repeats the test they get a similar result). That doesn’t mean a thing. A person’s height has a high “reliability” because it doesn’t change from day to day. That doesn’t mean it is good for hiring—well, unless you’re hiring a basketball player.
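To see why high reliability alone means little, the sketch below computes test-retest reliability and predictive validity as plain Pearson correlations, using height as the “test” in the spirit of the example above. All data are made up for six hypothetical candidates.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Made-up data for six candidates.
height_first = [170.0, 160.0, 185.0, 165.0, 180.0, 175.0]   # cm, first measurement
height_retest = [170.5, 159.5, 185.0, 165.0, 180.5, 174.5]  # cm, measured again
job_rating = [5.0, 4.0, 4.0, 3.0, 3.0, 5.0]                 # performance a year later

reliability = pearson(height_first, height_retest)  # test-retest agreement
validity = pearson(height_first, job_rating)        # does it predict the job?
print(f"reliability = {reliability:.2f}, predictive validity = {validity:.2f}")
```

The re-measured heights agree almost perfectly (reliability of about 1.00), yet in this made-up sample height has zero correlation with the job rating.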

It’s the same when companies claim high face validity (the candidate agrees that the results accurately represent them). Horoscopes have high face validity19, but we wouldn’t think of only hiring Libras because they are supposedly curious and outgoing.

One of the oldest personality profiling systems is Myers-Briggs. According to The Myers-Briggs Company, “88% of Fortune 500 companies use it in hiring and training”20. Myers-Briggs sorts people into 16 archetypes21 that have fancy names, like “Architect” and “Commander.” More than 20 studies concluded that Myers-Briggs doesn’t work22. The Myers-Briggs Type Indicator (MBTI) is not even reliable, as 50% of people get a different Myers-Briggs archetype on repeated testing23. Forbes concluded that, “MBTI is so popular because it provides the illusion of solution24.” The New York Times called Myers-Briggs “totally useless” and concluded that it is popular because people like to put themselves and others into categories25.

Another popular assessment is DiSC26 (Dominance, Influence, Steadiness, and Conscientiousness). DiSC results are not normalized27, meaning that you can’t use them to compare different people. Some assessment vendors, like People Success, claim that DiSC “has a validity rate of between 88% and 91%”28. A validity of around 0.9 would be a spectacular result for any pre-employment method, on par with a crystal ball. But the vendor failed to mention that it was just face validity29.

Generally, modern psychology has debunked the idea that people fall into certain “types.” Personality traits come in a spectrum—for example, people are distributed on a scale from introversion to extroversion. Traits are not binary. And, the traits that people actually have are not the ones claimed by Myers-Briggs or DiSC.

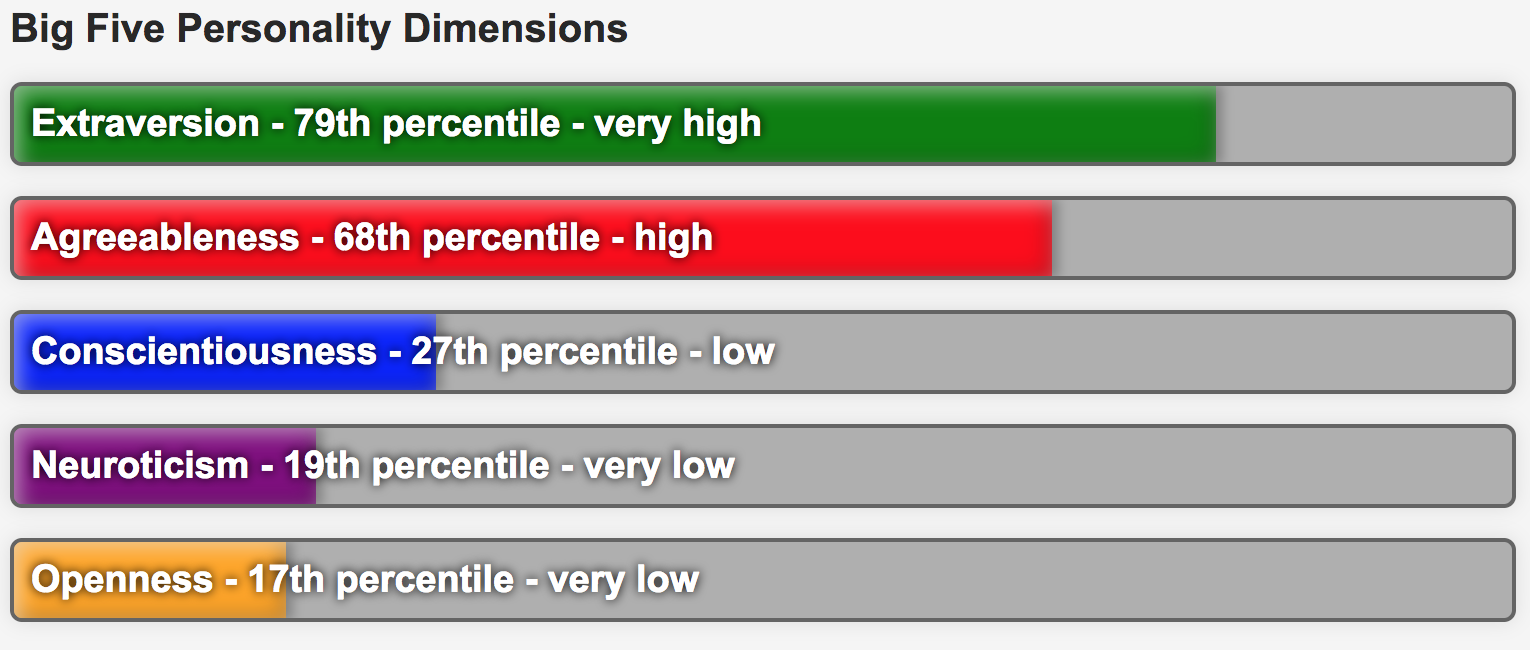

A personality test that is scientifically proven is the Big Five30, which measures openness, conscientiousness, extraversion, agreeableness, and neuroticism. The Big Five doesn’t classify people as a certain “type”; it merely offers percentage scores for each trait, as shown in the next figure.
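Percentage scores like these only make sense relative to a norm group, which is also what “normalized” means in the DiSC criticism above. A minimal sketch, assuming a hypothetical norm-group mean and standard deviation on a 1-5 questionnaire scale and a normally distributed trait (real tests publish their own norm tables):

```python
from statistics import NormalDist

# Hypothetical norm group for one trait, e.g. conscientiousness on a 1-5 scale.
NORM_MEAN = 3.4
NORM_SD = 0.6


def percentile(raw_score: float, mean: float = NORM_MEAN, sd: float = NORM_SD) -> float:
    """Percentage of the norm group scoring below raw_score, assuming normality."""
    z = (raw_score - mean) / sd  # distance from the norm mean in standard deviations
    return 100 * NormalDist().cdf(z)


for raw in (2.8, 3.4, 4.6):
    print(f"raw score {raw} -> {percentile(raw):.0f}th percentile")
```

Because every raw score is converted against the same norm group, two candidates’ percentiles can be compared directly, which is exactly what a non-normalized result does not allow.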

While scientific, the Big Five still gives weak predictions. A paper by Morgeson et al.31 summarizes multiple studies and concludes that only one of its traits (conscientiousness) is worth looking at, and even that correlates only weakly with job performance.

The Ultimate Measure: Predictive Validity

The only statistical measure that we should look for is predictive validity32. Unlike face validity and reliability, it measures how well screening results predict how candidates actually perform at their job after being hired.

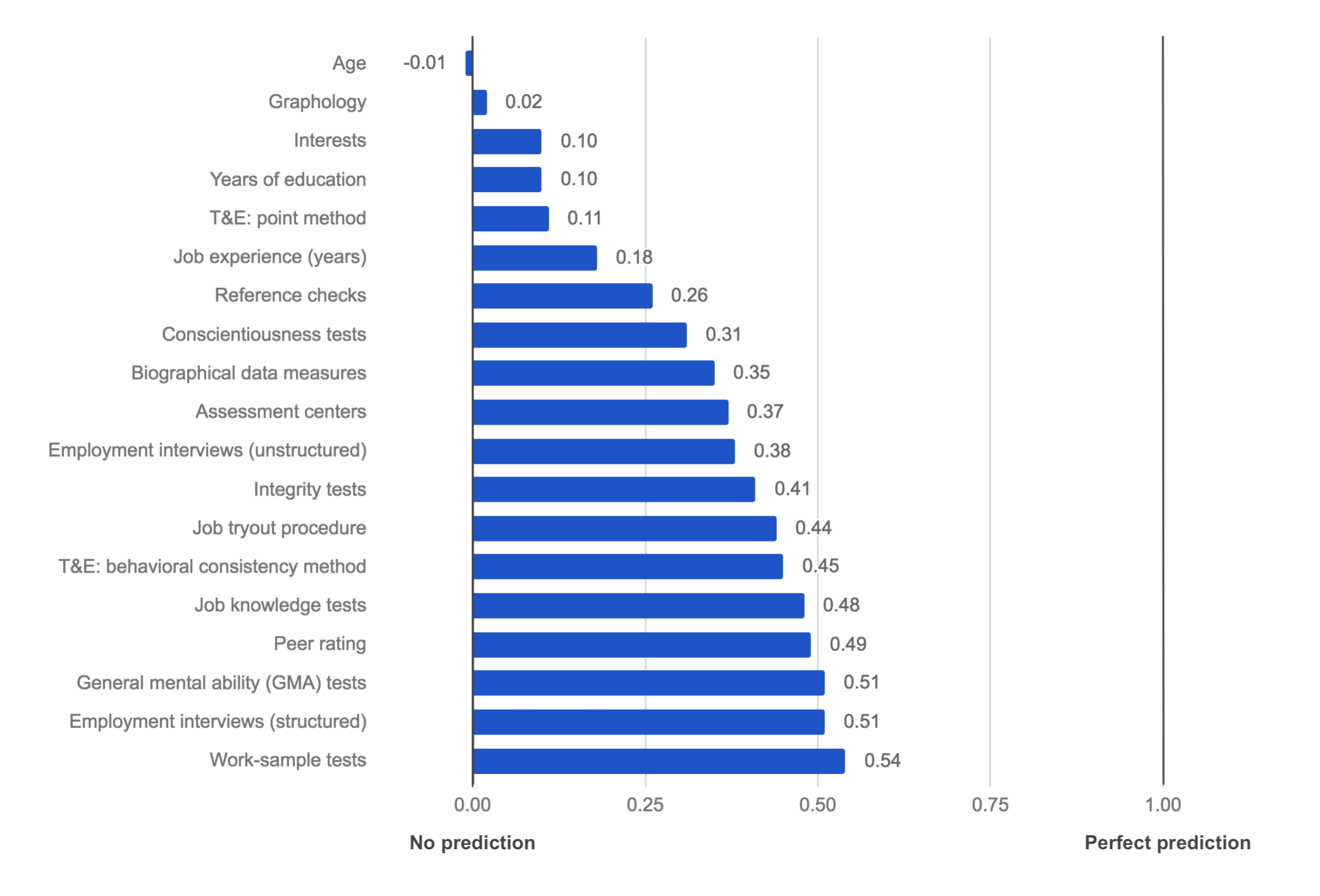

Predictive validity is very hard to calculate. One would need to follow up a few years after the hire to correlate actual job performance with the original screening scores. Fortunately, there’s research that shows which screening methods have high predictive validity. Schmidt and Hunter33 did a meta-analysis of thousands of studies and compared different screening methods. Their conclusions are in the next figure.

Predictive validity is a correlation coefficient that ranges from -1.0 (negative correlation) to 1.0 (positive correlation), with 0 meaning the method predicts nothing at all. The higher the number, the better the screening score predicts job performance. A screening method with a predictive validity of 1.0 would find a great hire every time.

Now, let’s go through the rankings, from worst to best.

Low Validity Methods

These methods definitely shouldn’t be used, because they have validity below 0.2 and, so, are about as good as asking Mystic Mahjoob, the local fortune teller:

| Method | Validity | Description |
|---|---|---|
| Age | -0.01 | Candidate age has non-existent validity, so companies that discriminate against candidates that are “too young” or “too old” should stop doing so, not only because it’s illegal in most countries, but also because it’s simply invalid. |
| Graphology | 0.02 | Analysis of a candidate’s handwriting is advertised as a way to detect their personality. It’s popular in Western Europe, especially in France, where 70-80% of companies still use it34 (no, I’m not making this up). This is surprising, since it has no validity and even the Wikipedia article on graphology35 describes it as a pseudoscience. |
| Interests | 0.10 | Interests are a common part of a résumé that you can safely ignore, as they have very low validity. |
| Years of education | 0.10 | Surprisingly, requiring more education for a job has very little validity. In other words, slapping a PhD requirement on a job that could be done by an engineer with just a bachelor’s degree doesn’t significantly improve the quality of your hires. |
| Training and experience: point method | 0.11 | This method gives points for a candidate’s training, education, and experience, multiplied by the length of time spent in each activity36. It can be calculated manually from a résumé or automatically from the application form. Point scoring is popular in government hiring, but, today, even government websites point out that it “does not relate well to performance on the job.”37 |
| Job experience (years) | 0.18 | Years of experience in the job correlate only slightly with job performance and, thus, should not be used. Experience is often a deciding factor because it’s easy to read from a candidate’s résumé and somehow feels intuitively valid. It isn’t. |
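The point method from the table above can be sketched in a few lines. The categories and points-per-year weights here are hypothetical; real point schemes, such as those used in government hiring, define their own.

```python
# Hypothetical points awarded per year spent in each activity.
POINTS_PER_YEAR = {
    "education": 2,
    "training": 1,
    "relevant_experience": 3,
}


def point_score(history: dict[str, float]) -> float:
    """Sum of (points per year x years spent) over each activity on the résumé."""
    return sum(POINTS_PER_YEAR[activity] * years for activity, years in history.items())


candidate = {"education": 4, "training": 0.5, "relevant_experience": 6}
print(point_score(candidate))  # 2*4 + 1*0.5 + 3*6 = 26.5
```

Note that the score grows with time spent, whatever the candidate actually achieved in that time, which fits the low validity reported in the table.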

Medium Validity Methods

The methods below have validity between 0.2 and 0.45 and can be used in addition to high validity methods:

| Method | Validity | Description |
|---|---|---|
| Reference checks | 0.26 | Contacting previous employers is a valid method, as past performance predicts future performance. Unfortunately, it is often not possible to contact a candidate’s current employer, previous employers are usually reluctant to share that information, and in some countries sharing it is illegal. |
| Conscientiousness tests | 0.31 | Conscientiousness is a measure of a specific personality trait and can be asked about during an interview. It highlights candidates with a high level of self-discipline. |
| Biographical data measures | 0.35 | Many life experiences (such as school, parenting style, hobbies, sports, membership in various organisations, etc.) are scored based on statistical data from past hires. Quite hard to develop, but easy to use thereafter. Note that sex, age, and marital status are illegal to use. |
| Assessment centers | 0.37 | Assessment centers use a variety of methods, including personality and aptitude tests, interviews, and group exercises. They are popular with companies, which is unfortunate, because their validity is relatively low for such a time-intensive, multi-method approach. |
| Employment interviews (unstructured) | 0.38 | A normal interview where there is no fixed set of questions. It is enjoyable for both a candidate and an interviewer, as it feels like a friendly chat, but doesn’t have the validity of a more structured interview. |
| Integrity tests | 0.41 | These tests can either ask candidates directly about their honesty, criminal history, drug use, or personal integrity, or draw conclusions from a psychological test. Again, conscientiousness is the most important personality trait. |
| Job tryout procedure | 0.44 | Candidates are hired with minimal screening and their job performance is monitored for the next three to six months. This method has reasonable validity, but it is very costly to implement. |
| Training and experience: behavioral consistency method | 0.45 | First, companies identify Knowledge, Skills and Abilities (KSA38) that separate high performers from low. Candidates are then asked to give past achievements for each KSA. Responses are scored based on a rating scale. |
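The behavioral consistency method in the last row can be sketched as averaging rated achievements across KSAs. The KSA names, the 1-5 anchored scale, and the rule that a KSA with no achievement given scores the minimum are all hypothetical choices for illustration.

```python
# Hypothetical KSAs a company might identify as separating high from low performers.
KSAS = (
    "debugging complex systems",
    "explaining technical trade-offs",
    "delivering on deadlines",
)

# Hypothetical anchored rating scale applied to each described past achievement:
# 1 = no relevant example, 3 = solid example of limited scope,
# 5 = strong example with clear, measurable impact.
MIN_RATING, MAX_RATING = 1, 5


def behavioral_consistency_score(ratings: dict[str, int]) -> float:
    """Average rating over all KSAs; a KSA with no achievement given scores the minimum."""
    for ksa, rating in ratings.items():
        if ksa not in KSAS:
            raise KeyError(f"unknown KSA: {ksa}")
        if not MIN_RATING <= rating <= MAX_RATING:
            raise ValueError(f"rating for {ksa!r} must be {MIN_RATING}-{MAX_RATING}")
    return sum(ratings.get(ksa, MIN_RATING) for ksa in KSAS) / len(KSAS)


candidate_ratings = {
    "debugging complex systems": 5,
    "explaining technical trade-offs": 3,
    "delivering on deadlines": 4,
}
score = behavioral_consistency_score(candidate_ratings)
print(score)  # (5 + 3 + 4) / 3 = 4.0
```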

High Validity Methods

Only five methods have a validity above 0.45:

| Method | Validity | Description |
|---|---|---|
| Job knowledge tests | 0.48 | Test the specific professional knowledge required for the job. This method has high validity, but can pose a problem when screening junior candidates who still require training. |
| Peer rating | 0.49 | Asking coworkers to evaluate a candidate’s performance and averaging the results is surprisingly valid, because people inside a company have better insight into each other’s abilities. A good method for in-company promotion or reassignment, but not for hiring outside employees. |
| General mental ability (GMA) tests | 0.51 | GMA tests measure a candidate’s ability to solve generic problems, such as numerical reasoning, verbal reasoning, or problem solving. They don’t guarantee that a candidate has the required skills, just the mental capability to develop them with training. Note that brainteasers like “How would you move Mount Fuji?” are too vague and subjective for a GMA test. |
| Employment interviews (structured) | 0.51 | The same interviewer asks different candidates identical questions, in the same order, writes down the answers (or, even better, records the entire session), and gives marks to each answer. This way, different candidates can be transparently compared using the same criteria. |
| Work-sample tests | 0.54 | To test whether a candidate will be good at the job, give them a sample of actual work to do. A simple and very effective idea. |

As these methods have the highest validity, they should be the core of our Evidence-Based Hiring process, so they’re what we will focus on in the rest of this book.

The Multiple Methods Approach

Based on the above research, we can conclude that:

No single method of screening has high enough validity to be used exclusively, so it is necessary to combine multiple methods.

There simply is no silver bullet for hiring: no simple signal in a résumé tells you whether a candidate is going to be a star hire. However, we now know which methods to combine to get the highest validity for the least time, effort, and cost: work-sample tests, knowledge tests, GMA tests, and structured interviews.

CHAPTER 4: Reward vs Effort

The Last Question

So, we need to use multiple methods. But, in which order?

Say that you apply for a Sales Manager job at the Acme Corporation. First, they conduct a phone interview. Then, they call you for a face-to-face interview. After that, you visit for a whole day of testing: aptitude tests, situational tests, personality tests, the whole shebang. The Friday after that, you have an on-site interview with your prospective manager. Ten days later, you have an evening interview with his manager. More than a month has passed since you applied, and you’re five rounds in. Annoying, since you’re currently employed and need to make excuses to go for each interview or test. Finally, they send you an email stating they want to make you an offer. Again, you come to the shiny Acme Corporation office for a final talk with both managers. They offer you a salary you are satisfied with—great. You wipe the sweat from your forehead and relax into your chair.

“Just one more question,” your would-be boss says. “Tu español es bueno, ¿no?”

“Erm. Sorry, what?” you say, flustered.

“You don’t speak Spanish?” he replies. “Oh, maybe we forgot to mention it, but you’ll be working a lot with our partners in Mexico. So, we need someone fluent in Spanish. Sorry.”

What would you do in this situation? I would probably take the nearest blunt object and launch it at their heads. How could anyone forget such a simple requirement as “fluent in Spanish”? All those afternoons wasted, for both you and them, just because they forgot to ask one question.

This story would be funnier if something similar hadn’t happened to me, both as a candidate and as an interviewer. It is a typical example of a minimum requirement that takes less than a minute to check, yet wasn’t checked until the very end of the hiring process.

How you order your screening process makes all the difference.

Reward vs. Effort Ratio

When I started screening candidates, I didn’t understand this. I put first whatever was traditionally done first, followed by whatever felt important to me. Everyone else began by screening résumés, so I did that too. Meeting a candidate for an interview was important to me, so that was a logical second step. I now understand that my ordering was completely wrong.

A screening process is a kind of funnel that, at each step, collects information that can predict future behavior. But collecting information costs both time and money. In other words:

Screening_Efficiency = Reward / Effort

Accordingly, the screening process should be reordered so that the most efficient methods come first. Higher-efficiency methods give the most valid information per unit of effort, saving time for both you and the candidate.
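To make the reordering rule concrete, here is a minimal Python sketch that sorts screening steps by Reward / Effort. The reward scores are hypothetical, unitless numbers chosen only for illustration (the book gives no numeric scale for reward); effort is company minutes per candidate, and the step names echo the Acme example.

```python
from dataclasses import dataclass


@dataclass
class ScreeningStep:
    name: str
    reward: float          # hypothetical, unitless score for the information gained
    effort_minutes: float  # company time spent per candidate

    @property
    def efficiency(self) -> float:
        # Screening_Efficiency = Reward / Effort
        return self.reward / self.effort_minutes


def order_by_efficiency(steps):
    """Return the steps sorted so that the most efficient ones come first."""
    return sorted(steps, key=lambda step: step.efficiency, reverse=True)


steps = [
    ScreeningStep("On-site interview", reward=8, effort_minutes=120),
    ScreeningStep("Phone screen", reward=1, effort_minutes=20),
    ScreeningStep("Spanish fluency check", reward=2, effort_minutes=1),
]

for step in order_by_efficiency(steps):
    print(f"{step.name}: {step.efficiency:.3f} reward per minute")
```

With these made-up numbers, the one-minute Spanish check comes out on top even though the interview yields more information in absolute terms, which is the Acme lesson in miniature.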

In the Acme example, an on-site interview gives more information than checking Spanish fluency, but an interview takes hours of managers’ and candidates’ time. Checking Spanish fluency gives less information than an on-site interview, but it requires only a one-minute phone call to know for sure whether you can reject them. It is vastly more efficient.

Why the Traditional Model Is Not Efficient

Let’s examine effort and reward in the traditional screening process for both candidates and employers. Here’s how the Acme Corporation process for an average candidate might look:

| Step | Company effort | Candidate effort | Reward | Validity |
|---|---|---|---|---|
| Application form, résumé, and a cover letter | 5 min. | 25 min. | Does the candidate satisfy the basic requirements? | Low |
| Phone screen | 20 min. | 15 min. | Can the candidate communicate well and answer promptly? | Low |
| First interview (unstructured) | 45 min. | 45 min. interview + 1 hour commute | Find out more about the candidate’s education, work experience, and style of communication. | Medium |
| On-site assessment and knowledge test | 4 hours | 4 hours of assessment + 1 hour commute | Candidate score on personality test, aptitude tests, knowledge test, and interview with a psychologist. | Medium |
| Second interview with two managers (structured) | 2 × 1 hour | 1 hour interview + 1 hour commute | Details about the candidate’s past behavior, experiences, strengths, and weaknesses, in a form that can be compared to other candidates. | High |
| Job offer (phone) | 5 min. | 5 min. | Will the candidate accept the terms? | |
| TOTAL | 7 hours 15 min. | 9 hours 30 min. | | |

As you can see, a huge amount of time is invested in each application by both the company and candidate.

The problem becomes apparent when you look at the last column. The point of screening is to collect the maximum reward (information) for the least effort (time). The first steps don’t give much useful information at all. A résumé tells us years of education or interests (validity of 0.1) and job experience (validity of 0.18). A short, fifteen-minute phone screening also has low validity. A company must invest more than five hours to get its first piece of high-validity information from the knowledge test. So why structure things this way?

The traditional approach is popular for two reasons. First, most people don’t question the way things have always been done. Second, people are uncomfortable asking tough questions. Most companies spend the first few hours on “soft” topics (conversation and personality tests) before they move on to the “hard” topics (testing knowledge and asking direct questions).

This makes sense for dating, because we often enjoy the dating process. But it doesn’t make sense for hiring, because both the interviewer and the candidate would rather be elsewhere. Being direct saves effort for both.

If a candidate passes the entire process, it takes 7 hours and 15 minutes of company time. Let’s look at how much time we could save by eliminating candidates earlier:

| Elimination step | Invested company effort | Time efficiency |
|---|---|---|
| Candidate passes all stages | 7 hours 15 minutes | 1x |
| Candidate fails the knowledge test | 5 hours 10 minutes | 1.4x |
| Candidate fails the phone screen | 25 minutes | 17.4x |
| Candidate fails application form questions | 5 minutes | 87x |

Eliminating a candidate at the application-form stage is 87 times more time efficient (5 minutes vs. 7 hours 15 minutes), and that’s before you multiply this by the number of candidates. If you have 20 candidates and each takes about five hours to screen before failing the knowledge test, the total effort is a huge 100 hours.
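The time-efficiency column is simply the full-process effort divided by the effort invested before elimination. This Python sketch recomputes it from the company-effort column of the Acme table; the only interpretation added here is that “fails the knowledge test” means elimination right after the on-site assessment step.

```python
# Company effort per step, in minutes, from the Acme table.
COMPANY_EFFORT = [
    ("Application form, résumé, and a cover letter", 5),
    ("Phone screen", 20),
    ("First interview (unstructured)", 45),
    ("On-site assessment and knowledge test", 4 * 60),
    ("Second interview with two managers (structured)", 2 * 60),
    ("Job offer (phone)", 5),
]

FULL_PROCESS = sum(minutes for _, minutes in COMPANY_EFFORT)  # 435 minutes


def invested_until(step_name):
    """Company minutes invested in a candidate eliminated at step_name (inclusive)."""
    total = 0
    for name, minutes in COMPANY_EFFORT:
        total += minutes
        if name == step_name:
            return total
    raise ValueError(f"unknown step: {step_name}")


for step in ["On-site assessment and knowledge test",
             "Phone screen",
             "Application form, résumé, and a cover letter"]:
    invested = invested_until(step)
    print(f"Fails at '{step}': {invested} min, {FULL_PROCESS / invested:.1f}x")
```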

Surprisingly, real-world companies cover the entire spectrum of efficiency. For example, one government agency that I know of would put every applicant through almost the full procedure, so they would be close to 1x. Needless to say, they have a large HR department. At the other end of the spectrum, some high-tech companies have automated screening, so that they can screen thousands of candidates with very little effort.

What is the efficiency of your company? How much, on average, does it cost you (in time and money) to eliminate a candidate?

Recruiters often say that they are very efficient, because they eliminate the majority of candidates from their résumé alone. But, if 90% of candidates are eliminated based on low-validity criteria, then that process can be summarized with this joke:

A recruiter splits a stack of job applications and throws half of them in the garbage. “What are you doing?!” asks his coworker. “Well,” answers the recruiter, “we don’t want unlucky people in our company, do we?”

Ways to Reduce Effort & Increase Reward

There is one ingenious idea rarely mentioned in screening resources: find the person who knows the candidate best, and convince them to do the screening for free. That person is the candidate themself, and the method is candidate self-selection. Candidates don’t want to be failures: they want to succeed in their job. If your job description indicates that they would probably fail if they were hired, they’re not going to apply.

When advertising normal products, you want to sell to as many people as possible. But when advertising a job, you want to “sell” it to the right people and scare away everybody else.

I realized this the first time that my company was advertising a managerial position. Our previous job ad was for a developer and we had 30 applicants. But, when we needed a manager, which was a more demanding job, more than 140 people applied. The problem was that our developer job ad was very specific: we listed languages and technologies which candidates needed to know. The manager’s job ad was more general, and everybody thinks they would be a great manager. It didn’t have specific criteria that candidates could use for self-elimination.

Whenever a job ad is too general, the quality of candidates will decrease. Therefore, you must always provide a realistic picture of what the job actually entails, and your selection criteria for getting it. If your job requires frequent weekend trips, say so. If the necessary criterion is at least two years of experience managing five people, say that loudly, in ALL CAPS if you must. It saves everyone’s time.

If the job description is too unspecific, you will end up with hundreds of candidates but run the risk of your hire quitting after six months because the job was “not what they were expecting.”

Sounds obvious, but I used to make the mistake of leaving out one crucial part of the job description, the salary range, just because other companies omitted it too. While I knew exactly what salary range my company could afford, salary negotiations are difficult and awkward, so I left them for the end. As a result, I spent hours and hours interviewing some senior candidates, only to discover that, short of an unforeseen lottery win, I could not afford them. I wasted their time and mine.

The second way to decrease effort significantly is to employ the magic of automated testing. Here, the computer can screen hundreds of candidates for near-zero cost, once we’ve invested the one-time effort of developing a good automated test.

The third way to decrease effort is to divide testing into two parts—short tests early in the screening process and longer tests later. Most candidates will fail the first test anyway, so we will save time by not doing longer, unnecessary tests with them. This also saves time for candidates, as they only need to take the longer test if they are qualified.
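The saving from splitting a test can be estimated with simple arithmetic. In this Python sketch, the test lengths (15 and 120 minutes) and the 20% pass rate of the short test are hypothetical numbers, not figures from the book; the minutes can stand for candidate time or for reviewer time.

```python
def minutes_long_test_only(candidates: int, long_minutes: float) -> float:
    """Everyone takes the long test."""
    return candidates * long_minutes


def minutes_two_stage(candidates: int, short_minutes: float,
                      long_minutes: float, short_pass_rate: float) -> float:
    """Everyone takes the short test; only those who pass it take the long one."""
    passed = candidates * short_pass_rate
    return candidates * short_minutes + passed * long_minutes


one_stage = minutes_long_test_only(100, long_minutes=120)
two_stage = minutes_two_stage(100, short_minutes=15, long_minutes=120,
                              short_pass_rate=0.2)
print(f"Long test only: {one_stage:.0f} min, short test first: {two_stage:.0f} min")
```

Under these assumptions, the two-stage design needs roughly a third of the minutes; the actual saving depends on how many candidates the short test filters out.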

To increase the reward, we need to use high-validity methods as early as possible. Every method in the screening process asks the applicant to answer questions. These can be as simple as the “Name” field on the application form, as complex as answering a mathematical problem in a structured interview, or as straightforward as stating whether they will accept a starting salary of $65,000. Our rewards are the answers to these questions. They tell us whether to send the applicant further down the funnel or back home.

Every method, whether it has high or low validity, has one thing in common—it’s only as good as the questions asked within it. So, the next chapter is dedicated solely to the art of asking good questions.

CHAPTER 5: Maximizing the Reward: How to Ask Good Questions?

The general rule for screening questions in both tests and interviews is simple, but very powerful once you’ve wrapped your head around it:

Only ask a question if it can eliminate the candidate in the current screening round.

After all, if it can’t provide actionable evidence, why should you waste time on it? Of course, you can always ask questions like, “What are your hobbies?” but, if one candidate answers “football” and the other “sailing,” what can you do with that information? How does it inform your hiring decision? Are you going to reject the candidate who prefers football? Interviewers often say that such questions help provide a “complete picture” of a candidate, but previous chapters have explained why that’s nothing more than a back door for bias.

It’s the same with easy questions: asking a qualified accountant the difference between accounts payable and receivable won’t provide any actionable information. Of course, in addition to being eliminatory and moderately hard, a question also needs to have predictive validity. We don’t want to eliminate 90% of candidates for being right-handed, no matter how good our last left-handed employee was.

Great Questions Cheat Sheet: Bloom’s Taxonomy

Fortunately, there’s one high-level concept that you can learn in 15 minutes, which will make you much better at asking elimination questions.

In every domain, there are different levels of knowledge. Memorizing the formula E = mc² shows some knowledge but doesn’t make you a physicist; that might require applying E = mc² to a specific problem.

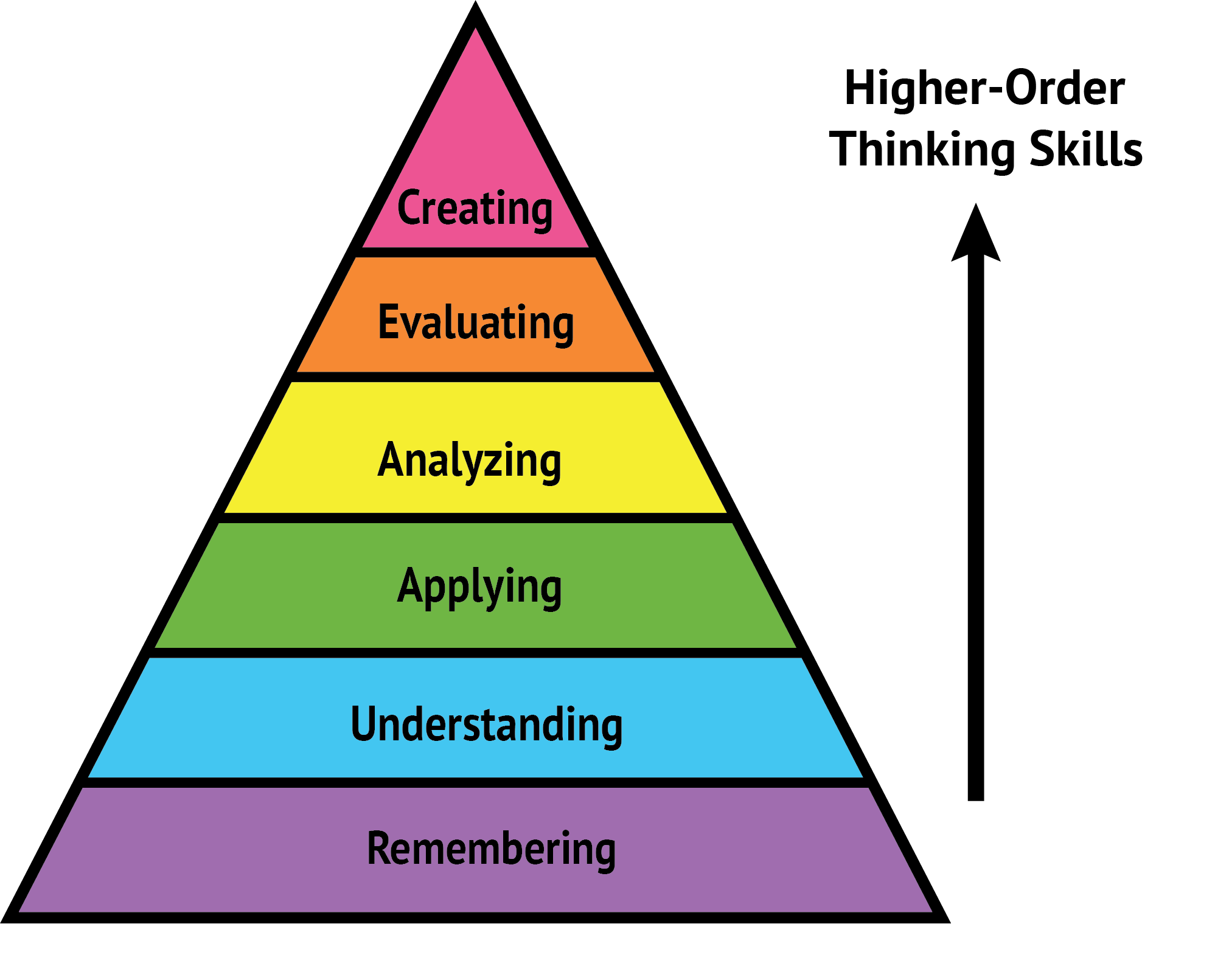

The American psychologist Benjamin Bloom categorized these different levels of knowledge into a hierarchy we now call Bloom’s Taxonomy [39], see the next figure.

At the bottom of the pyramid are lower-order thinking skills: remembering, understanding, and applying. At the top of the pyramid are higher-order thinking skills: analyzing, evaluating, and creating. Creating is the hardest skill in any profession, and the fewest candidates can do it.

For example, here is how Bloom’s taxonomy is applied to testing foreign-language proficiency:

Remembering: recall a word in a foreign language.

Understanding: understand text or audio.

Applying: use a language to compose an email or to converse with people.

Analyzing: analyze a piece of literature.

Evaluating: discuss which book has better literary value.

Creating: write a poem, essay, or fictional story.

Bloom’s taxonomy is an important tool for your screening because:

The higher the question in the hierarchy, the more domain knowledge the candidate needs to demonstrate.

However, not all tasks require higher-tier knowledge. For example, if you are hiring a Spanish-speaking customer support representative, you need someone who applies the language (level 3). So, while it might seem better to have a candidate who can also create poetry in Spanish (level 6), we need to consider if they will get bored doing a job below their knowledge level. Therefore:

The elimination questions that we ask should match the skill level required for the job.

If the test is too low in the hierarchy, then it is easier for both the administrator and the test taker—but it completely loses its purpose. Unfortunately, both job screening and academic tests often get this wrong. In education, schools want most of their students to pass. Often rote memorization is all you need. But, the fact that you’ve memorized which year Napoleon was born doesn’t make you a good historian—doing original research does.

Let’s see examples of automated questions for every level of Bloom’s taxonomy, and how to automate scoring for each step.
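Levels 1 to 3 below all use multiple-choice answers, which a computer can score with no human involvement. Here is a minimal Python sketch of such a scorer, assuming a per-question time limit (like the two-minute limit suggested for the level-2 question) and a simple right-or-wrong mark. The class and function names are hypothetical, not taken from any testing platform.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class MultipleChoiceQuestion:
    text: str
    options: Tuple[str, ...]
    correct_index: int
    time_limit_seconds: Optional[float] = None  # None means no time limit


def score_answer(question: MultipleChoiceQuestion, chosen_index: int,
                 elapsed_seconds: float) -> int:
    """Return 1 for a correct answer given within the time limit, else 0."""
    if (question.time_limit_seconds is not None
            and elapsed_seconds > question.time_limit_seconds):
        return 0  # too slow: treated as a failed answer
    return int(chosen_index == question.correct_index)


planets = MultipleChoiceQuestion(
    text="How many planets are in the solar system?",
    options=("7", "8", "9", "10", "11"),
    correct_index=1,
    time_limit_seconds=120,
)

print(score_answer(planets, chosen_index=1, elapsed_seconds=40))
```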

Level 1: Remembering

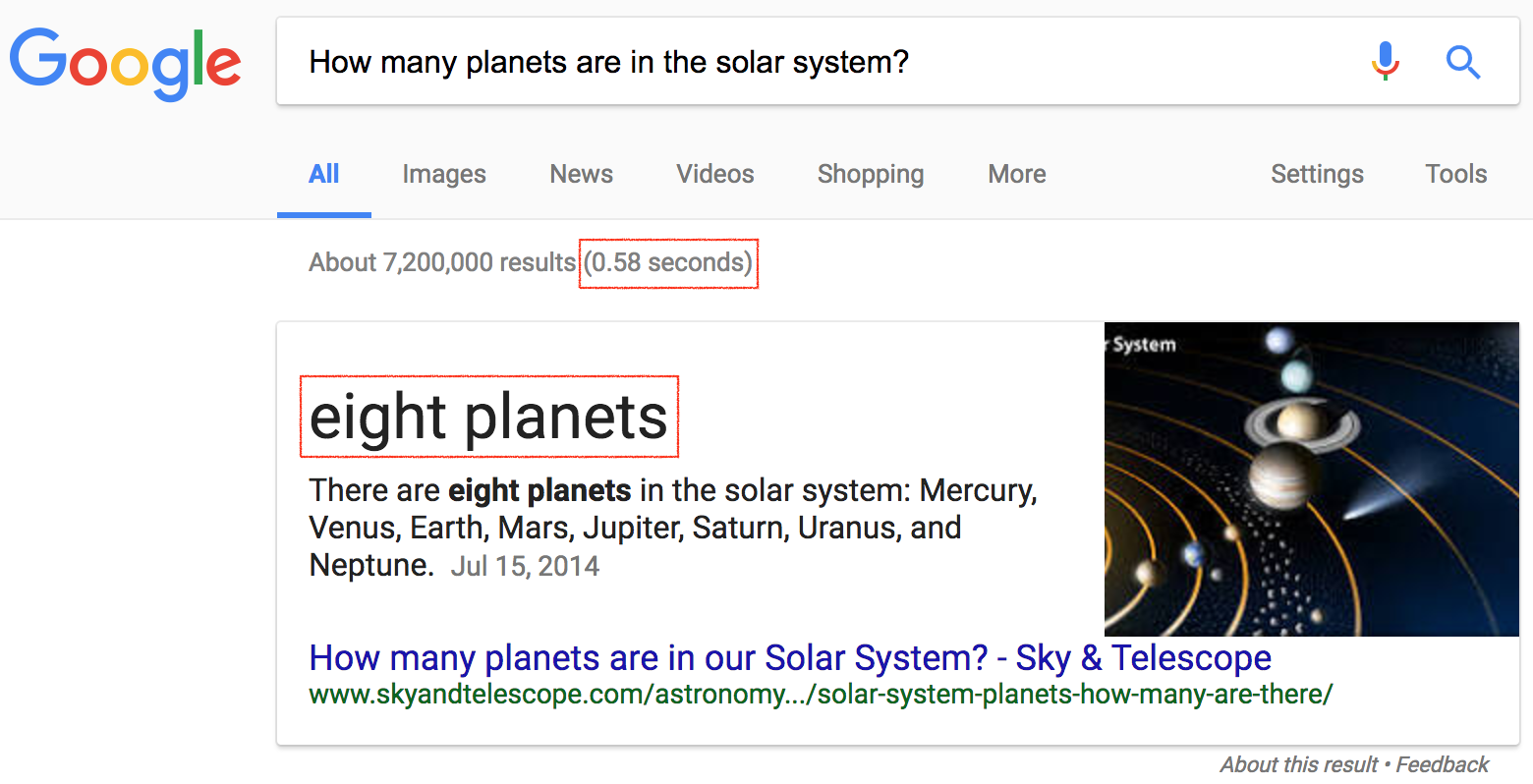

Let’s suppose you are hiring an astronomer. You could test basic knowledge with the following question:

How many planets are in the solar system?

7

8 (correct)

9

10

11

This is often called a trivia question—recalling bits of information, often of little importance. In my modest opinion, using such questions in screening should be banned by law. Why?

First, trivia questions test simple memorization, which is not an essential skill for 21st-century jobs.

Second, since memorization is easy, test creators often try to make it harder by adding hidden twists. The hidden twist in the question above is that there were nine planets until 2006, when Pluto was demoted to a dwarf planet. A person who knows astronomy, but was on holiday when news of Pluto’s dismissal was going around, would fail this question. On the other hand, a person who knows nothing about astronomy but just happened to be reading a newspaper that day, back in 2006, would answer correctly.

Third, trivia questions are trivial to cheat. If you copy-paste the exact question text into Google, you will get an answer in less than a second, see the next figure.

This is not only a problem for online tests: it’s also trivial to cheat in a supervised classroom setting. All you need is a smartphone in a bag, a mini Bluetooth earbud [40], and a little bit of hair to cover your ear. It doesn’t take a master spy. How can you know if a student is just repeating a question to themself or talking to Google Assistant?

For some reason, many employers and even some big testing companies love online tests with trivia questions, such as “What is X?” They think having copy-paste protection and a time limit will prevent cheating. In my experience, they merely test how fast a candidate can switch to another tab and retype the question into Google.

This brings us to the last problem with badly formulated trivia questions: candidate perception. If you ask silly questions, you shouldn’t be surprised if the candidate thinks your company is not worth their time.

Level 2: Understanding

How can you improve the Pluto question? You could reframe it to require understanding, not remembering. For example, this is better:

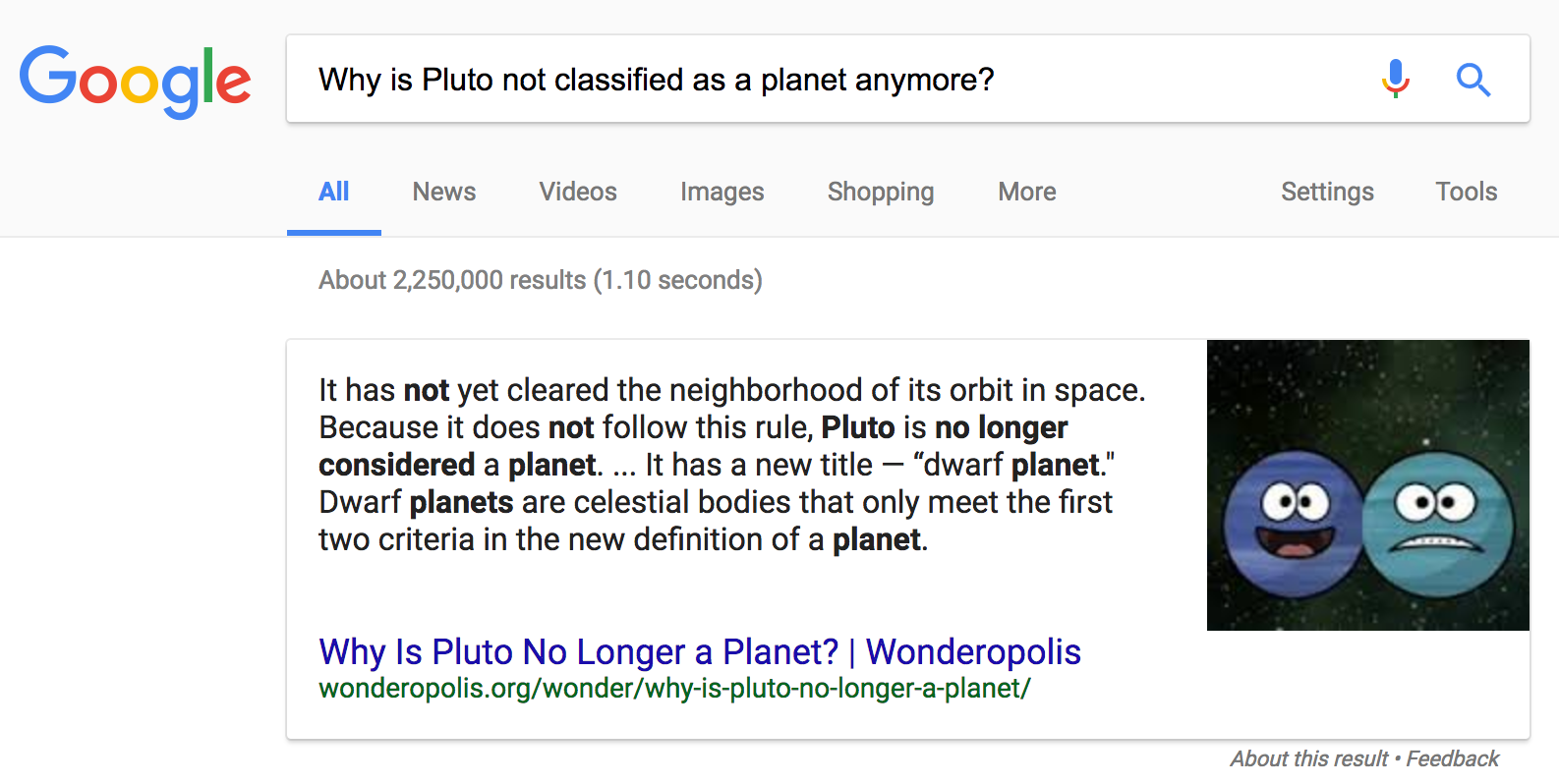

Why is Pluto no longer classified as a planet?

It is too small.

It is too far away.

It doesn’t dominate its neighborhood. (correct)

We have discovered many larger objects beyond it.

All the options offered are technically true: Pluto is small and far away, and astronomers have discovered many large objects beyond it. But that is not the reason for its reclassification. You would need to understand that astronomers defined three criteria a body must meet to be a planet, and Pluto doesn’t satisfy the third one: dominating its neighborhood.

However, just like level-1 questions, even the rephrased question above can be solved with a quick Google search, see the next figure.

A smart candidate will deduce the right answer after reading the first search result. It would take a little more skill to do so, which is good, but not very much, which is not.

However, there is a trick to improving level-2 questions even further:

If the question is potentially googleable, replace its important keywords with descriptions.

In the example above, the Google-friendly keyword is “Pluto.” If we replace it with a description (celestial body) and add a decoy about another planet (Neptune), we get a better question:

Recently, astronomers downgraded the status of a celestial body, meaning that Neptune has become the farthest planet in the solar system. What was the reason for this downgrade of that celestial body?

It is too small.

It is too far.

It doesn’t dominate its neighborhood. (correct)

We discovered many large objects beyond it.

Currently, the first result Google returns for this question is completely misleading [41]. Therefore, with a time limit of two minutes, this question can be part of an online screening test. However, Google is always improving and, in a year, it’ll probably be able to answer it. So, let’s look at better questions to ask, further up Bloom’s taxonomy.

Level 3: Applying

Let’s presume that our imaginary candidates are applying to work at an astronomy summer camp. They need to organize stargazing workshops, so the job requires knowing the constellations of the night sky.

One approach would be organizing a dozen late-night interviews on a hill outside of the city, and rescheduling every time the weather forecast is cloudy.

However, there’s a much easier way—we just test if the candidates can apply (level 3) their constellation knowledge with a simple multiple-choice question:

Below is a picture of the sky somewhere in the Northern Hemisphere. Which of the enumerated stars is the best indicator of North?

A

B

C

D

E

F (correct)

This question requires candidates to recognize the Big Dipper [42] and Little Dipper [43] constellations, which help to locate Polaris (also known as the North Star). The picture [44] shows the real night sky with thousands of stars of different intensities, which is a tough problem for a person without experience.

Because the task is presented as an image, it is non-googleable. A candidate could search for tutorials on how to find the North Star, but that would be hard to do in the short time allotted for the question.

This level of question is not trivial, but we are still able to automatically score responses. In my opinion, you should never go below the apply level in an online test. Questions in math, physics, accounting, and chemistry which ask test-takers to calculate something usually fall into this apply category, or even into the analyzing category, which is where we’re going next.

Level 4: Analyzing

Unlike the apply level, which is straightforward, analyzing requires test-takers to consider a problem from different angles and apply multiple concepts to reach its solution.

Let’s stick with astronomy:

We are observing a solar system centered around a star with a mass of 5 × 10³⁰ kg. The star is 127 light years away from Earth and its surface temperature is 9,600 K. The star has been detected to wobble with a period of 7.5 years and a maximum wobble velocity of ±1 m/s. Assuming that this wobbling is caused by a single gas giant on a perfectly circular orbit, and that the gas giant’s orbital plane lies along our line of sight, calculate the mass of the gas giant.

2.41 × 10²⁶ kg (correct)

8.52 × 10²⁶ kg

9.01 × 10²⁷ kg

3.89 × 10²⁷ kg

7.64 × 10²⁸ kg

Easy, right? There are a few different concepts here: Doppler spectroscopy, Kepler’s third law, and the circular orbit equation. Test-takers need to understand each one to produce the final calculation [45]. Also, note that the star’s distance and surface temperature are not needed: as in real life, the candidate needs to separate important from unimportant information.
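For readers who want to check the answer, here is the calculation as a short Python sketch. It assumes the planet is much lighter than its star (so the star’s mass alone sets the orbit in Kepler’s third law), uses G = 6.674 × 10⁻¹¹ m³ kg⁻¹ s⁻² and a 365.25-day year, and, as the question intends, ignores the distance and temperature. It is one way to solve the problem, not the only one.

```python
import math

G = 6.674e-11                          # gravitational constant, m^3 kg^-1 s^-2
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def planet_mass(star_mass_kg, period_years, star_speed_ms):
    """Mass of one planet on a circular, edge-on orbit, from its star's wobble."""
    period_s = period_years * SECONDS_PER_YEAR
    # Kepler's third law (planet mass neglected): a^3 = G * M * P^2 / (4 * pi^2)
    orbit_radius_m = (G * star_mass_kg * period_s ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    # Circular orbit: the planet covers the circumference 2*pi*a once per period.
    planet_speed_ms = 2 * math.pi * orbit_radius_m / period_s
    # Star and planet circle their common center of mass with equal momentum:
    # M * v_star = m * v_planet. An edge-on orbit means the Doppler shift
    # measures the star's full orbital speed.
    return star_mass_kg * star_speed_ms / planet_speed_ms


mass = planet_mass(star_mass_kg=5e30, period_years=7.5, star_speed_ms=1.0)

# Automatic scoring: pick the listed option closest to the computed value.
options = [2.41e26, 8.52e26, 9.01e27, 3.89e27, 7.64e28]
closest = min(options, key=lambda option: abs(option - mass))
print(f"computed {mass:.3e} kg, closest option {closest:.2e} kg")
```

The computed value lands closest to the correct option, 2.41 × 10²⁶ kg; the last digit depends on how the constants and intermediate values are rounded.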

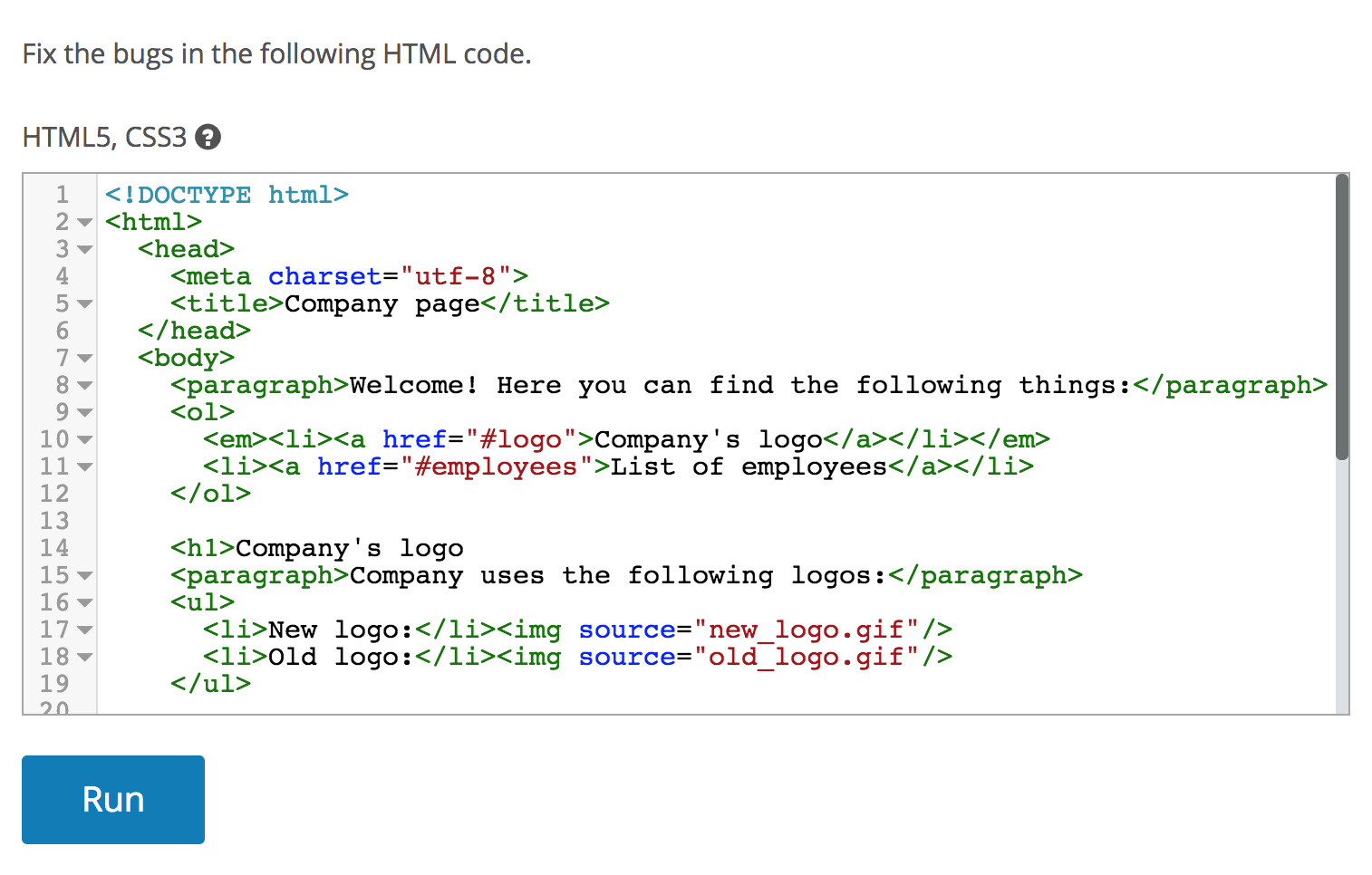

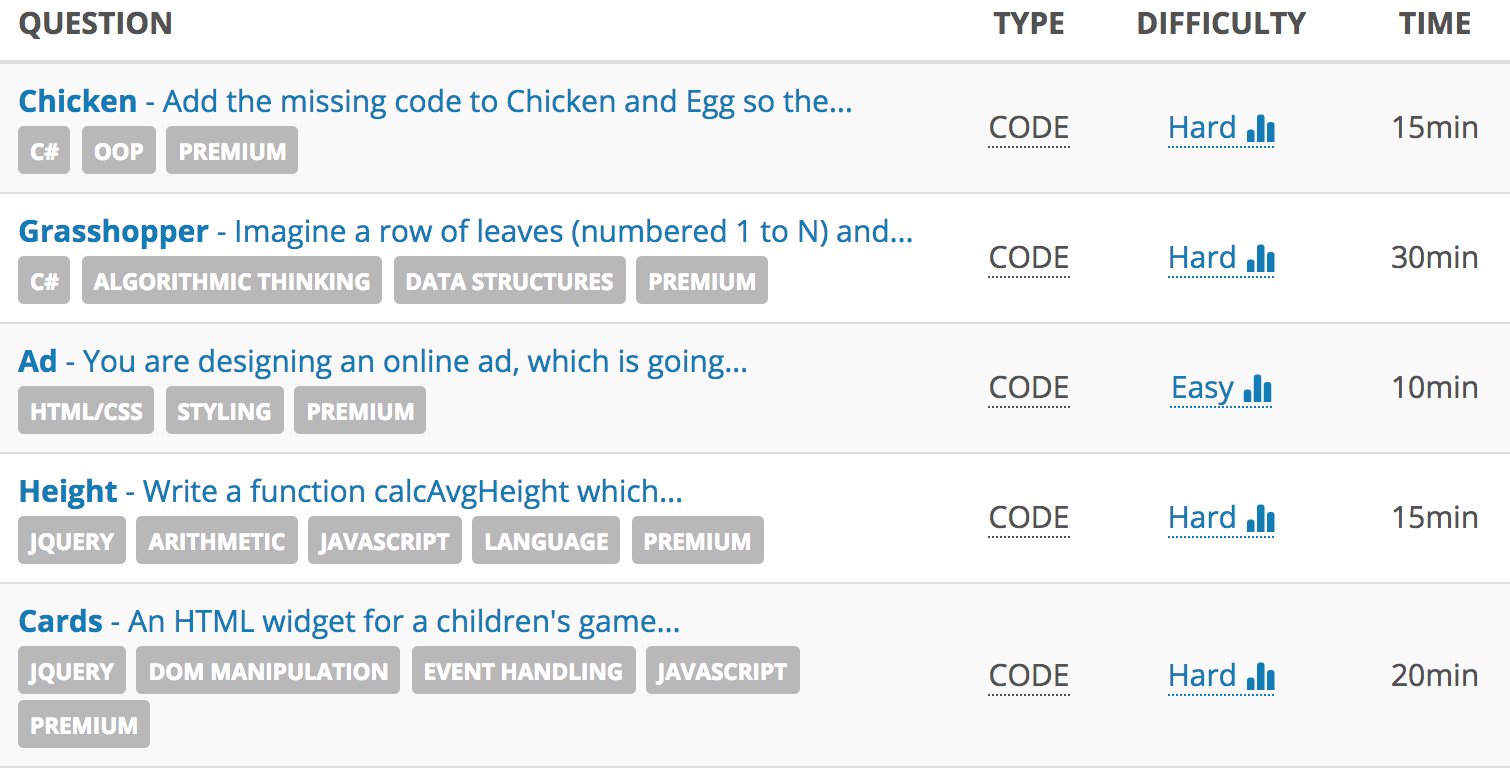

If the example above was a bit complicated, this second one is more down-to-earth, see the next figure.

The above text box contains HTML with four errors and a Run button which provides feedback to the candidate on the debugging progress. As fixing invalid code is the bread and butter of front-end development, this kind of task easily filters out candidates with no HTML experience.

Of course, we’ve now moved beyond multiple-choice answers, which means marking this type of question is more difficult. But it’s still possible to automate it: you write code that checks the output of the candidate’s solution, called a unit test [47]. Today, a few platforms provide a testing service where you can upload your own code and unit tests to check candidates’ solutions.
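As a hedged illustration of such a check, the Python sketch below uses the standard library’s `html.parser` to flag mismatched and unclosed tags in a candidate’s fixed HTML. The actual errors in the task from the figure are not known here, so this is a generic check under that assumption. It is also stricter than the HTML specification, which lets some end tags (such as `</li>`) be omitted.

```python
from html.parser import HTMLParser

# Elements that never take a closing tag in HTML.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


class TagBalanceChecker(HTMLParser):
    """Records mismatched closing tags and keeps a stack of open ones."""

    def __init__(self):
        super().__init__()
        self.open_tags = []
        self.errors = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.open_tags.append(tag)

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return
        if self.open_tags and self.open_tags[-1] == tag:
            self.open_tags.pop()
        else:
            self.errors.append(f"unexpected </{tag}>")


def check_html(source):
    """Return a list of problems; an empty list means the tags are balanced."""
    checker = TagBalanceChecker()
    checker.feed(source)
    checker.close()
    unclosed = [f"unclosed <{tag}>" for tag in reversed(checker.open_tags)]
    return checker.errors + unclosed


print(check_html("<p>Hello <b>world</p>"))
```

A unit test for the task could then simply assert that `check_html(submission) == []`, alongside checks on the rendered content.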

Level 5: Evaluating

The next level, evaluating, demands more than merely analyzing or applying knowledge. To be able to critique literature or architecture, you need to have a vast knowledge of the subject to draw from. Typical questions in the evaluating category might be:

Do you think X is a good or a bad thing? Explain why.

Judge the value of Y.

How would you prioritize Z?

While great in theory, scoring answers to evaluating-level questions is difficult and subjective in practice. Reviewing a candidate’s answer would require a lot of domain knowledge, and would still be subjective. If a candidate gave a thorough explanation as to why the best living writer is someone who you’ve never heard of, let alone read, would you give them a good or bad score?

In the end, such answers are highly opinionated. That makes it hard to measure knowledge, and the score ends up reflecting:

How strongly a candidate’s opinion matches those of the interviewer. “She also thinks X is the best, she must be knowledgeable!”

How eloquent the candidate is. “Wow, he explained it so well and with such confidence, he’s obviously very knowledgeable!”

Unless you want your company to resemble an echo chamber or a debate club, my advice is to avoid evaluating questions in tests and interviews. Because of the problems above, Bloom’s taxonomy was revised in 2000 [48]. We’re using the revised version, with evaluating demoted from level 6 (the top) to level 5. Let’s go meet its level-6 replacement:

Level 6: Creating

Creating is the king of all levels. To create something new, one needs not only to remember, understand, apply, analyze, and evaluate, but also to have the extra spark of creativity to actually make something new. After all, it’s this key skill that separates knowledge workers from other workers.

Surprisingly, creating is easier to check than evaluating. If a student is given the task of creating an emotional story, and their story brings a tear to your eye, you know they are good. You may not know how they achieved it, but you know it works.

Accordingly, creating-level tests are often used in pre-employment screening, even when they need to be manually reviewed. For example:

Journalist job applicants are asked to write a short news story based on fictitious facts.

Designer job applicants are asked to design a landing page for a specific target audience.

Web programmers are asked to write a very simple web application based on a specification.

Although popular and good, this approach has a few drawbacks:

Manually reviewing candidate answers is time consuming, especially when you have 20+ candidates.

Reviewers are not objective, and have a strong bias to select candidates who think like them.

The best candidates don’t want to spend their whole evening working on a task. Experienced candidates often outright reject such tasks, as they feel their résumé already demonstrates their knowledge.

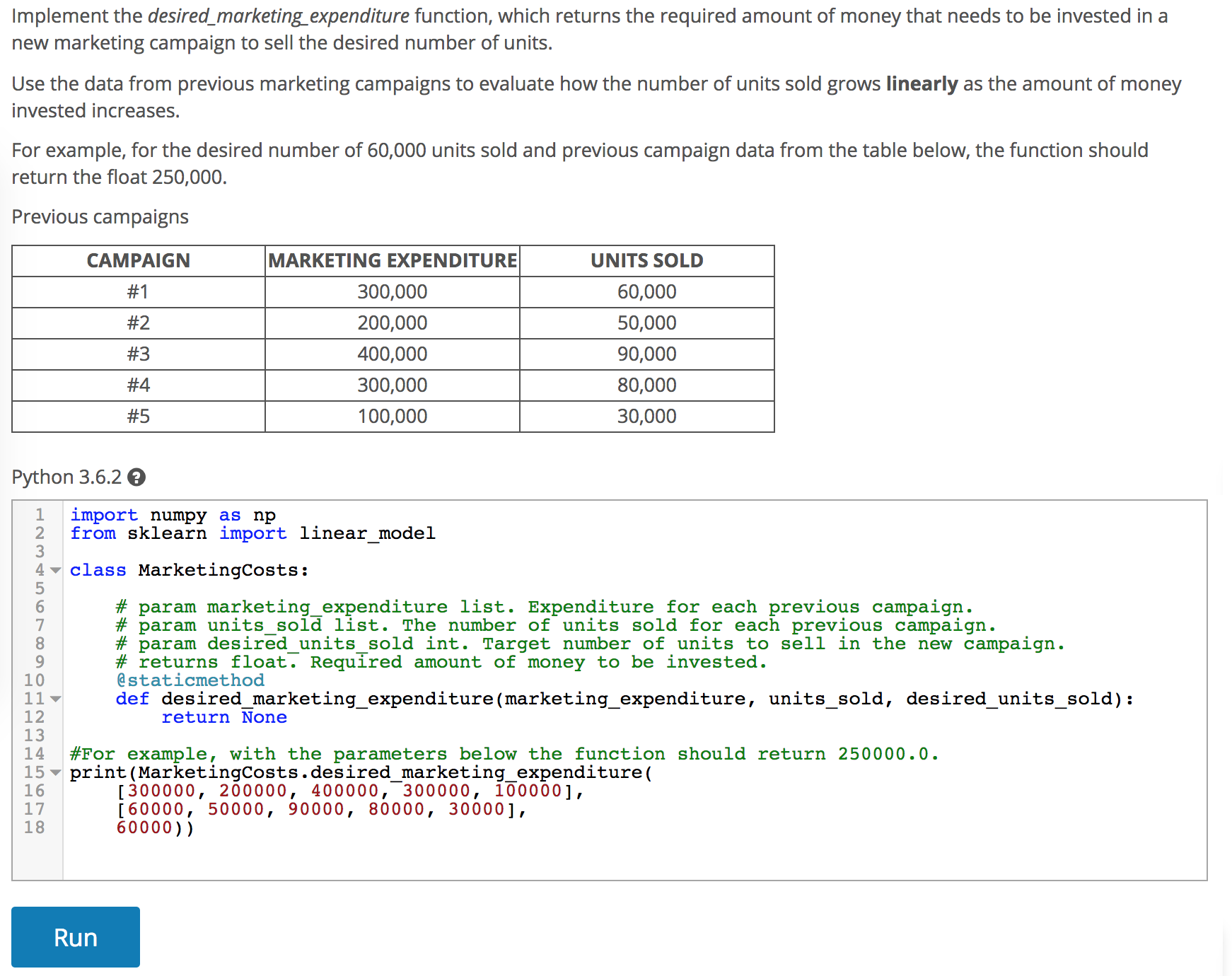

The solution to these problems is to break down what you’re testing into the shortest unit of representative work, and test just that. Here is an example of a creating-level question which screens data scientists, by asking them to create a single function in Python, see the next figure.

A good data scientist with Python experience can solve this question in less than 20 minutes. While there are a few ways to implement this function, which method the candidate chooses doesn’t matter as long as it works. We can just automatically check if the created function returns valid results.
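Here is a minimal Python sketch of that automatic check. The function from the figure isn’t reproduced here, so a hypothetical task, `moving_average(values, window)`, stands in for it. The grader runs reference test cases and counts how many the submission passes, regardless of how it was implemented.

```python
import math

# Hypothetical task given to candidates (not the task from the figure):
# "Write moving_average(values, window) that returns the mean of every
#  run of `window` consecutive values."
TEST_CASES = [
    (([1, 2, 3, 4, 5], 2), [1.5, 2.5, 3.5, 4.5]),
    (([10, 20, 30], 3), [20.0]),
    (([5.0], 1), [5.0]),
]


def grade(candidate_fn):
    """Return the share of reference test cases the submitted function passes."""
    passed = 0
    for (values, window), expected in TEST_CASES:
        try:
            result = list(candidate_fn(values, window))
        except Exception:
            continue  # a crash counts as a failed test case
        if len(result) == len(expected) and all(
            math.isclose(got, want) for got, want in zip(result, expected)
        ):
            passed += 1
    return passed / len(TEST_CASES)


# Two different submissions; both should get full marks.
def loop_submission(values, window):
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


def prefix_sum_submission(values, window):
    sums = [0.0]
    for value in values:
        sums.append(sums[-1] + value)
    return [(sums[i + window] - sums[i]) / window
            for i in range(len(values) - window + 1)]


print(grade(loop_submission), grade(prefix_sum_submission))
```

The two submissions use different techniques (a plain loop and running sums), yet the grader scores them identically, which is the point: only the result matters.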

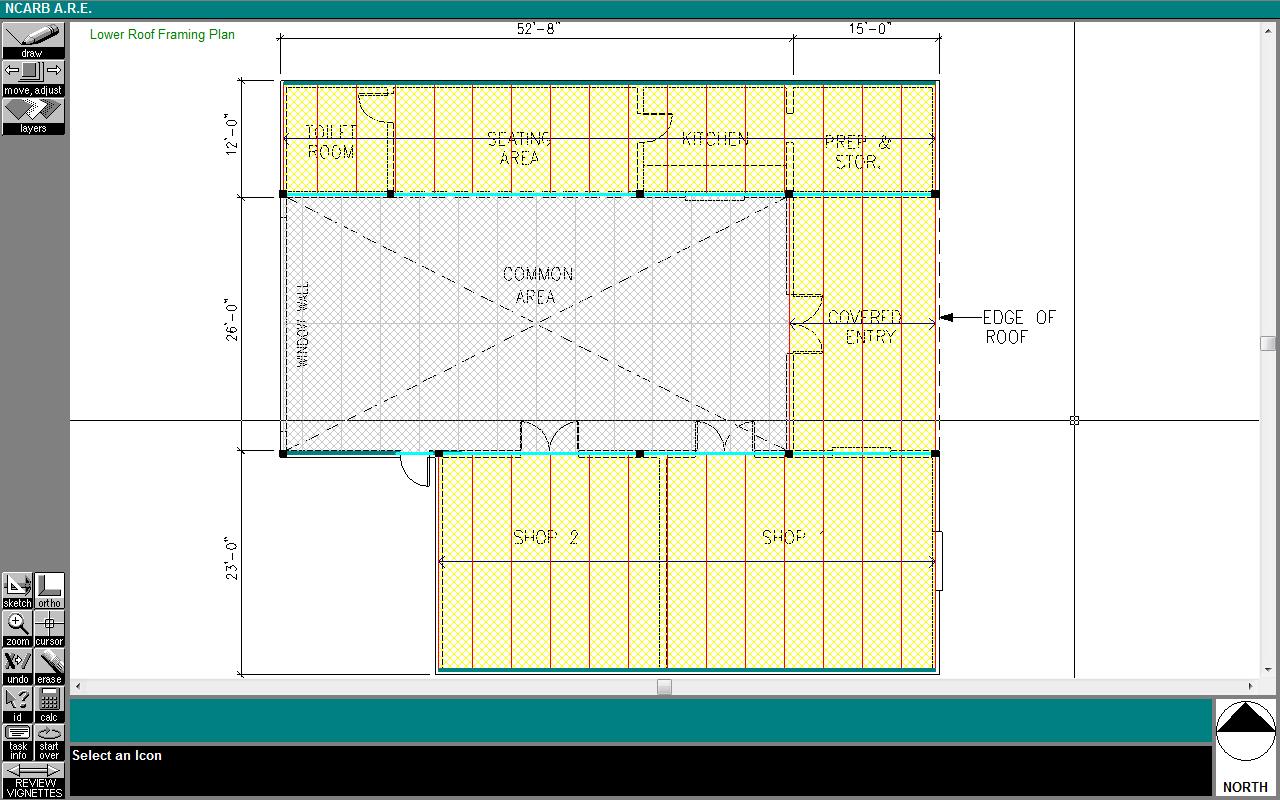

Most people think their profession is too complicated to be tested automatically. Don’t presume this. For example, architecture is a very complex profession, where any architectural problem has an infinite number of solutions. Yet, you will probably be surprised to learn that, since 1997, certification for architects in the United States and Canada has been a completely automated computer test. The ARE 4.0 version of that test [50] contains 555 multiple-choice questions and 11 “vignettes.” A vignette is actually a full-blown simulation program where the aspiring architect needs to solve a problem by drawing elements. For example, the Structural Systems Vignette in the next figure asks a candidate to complete the structural framing for a roof, using the materials listed in the program.

The candidate’s solution is automatically examined and scored, without human involvement. That makes the testing process transparent and equal for all. There is no chance for examiner bias, nepotism, or plain corruption in the examination process.

If architects in the United States and Canada have been automatically evaluated using creating-level questions since 1997, why is such testing still the exception rather than the rule for technically oriented jobs 20 years later?

I don’t know, and I think we can do much better.

CHAPTER 6: The New Model in Detail

Now, we have all the building blocks we need. Let’s do a quick recap and then explain our new screening process step by step.

Everything We’ve Learned: Validity, Reward vs. Effort, Good Questions

We learned that not all methods of screening are equal, as they differ substantially in their predictive validity. High-validity methods are:

Job knowledge tests.

Peer rating (for in-company hiring).

General mental ability tests.

Structured employment interviews.

Work-sample tests.

Therefore, these are going to be the primary methods of our improved screening process.

To minimize possible bias, we will write down the requirements for the job and stick to them in every step of the process. We will avoid subjective judgements of “fit” and write every decision down, so that the process can be reviewed by other people. Because different jobs require different levels of knowledge, we will take Bloom’s taxonomy into account and write questions pitched at the job’s level.

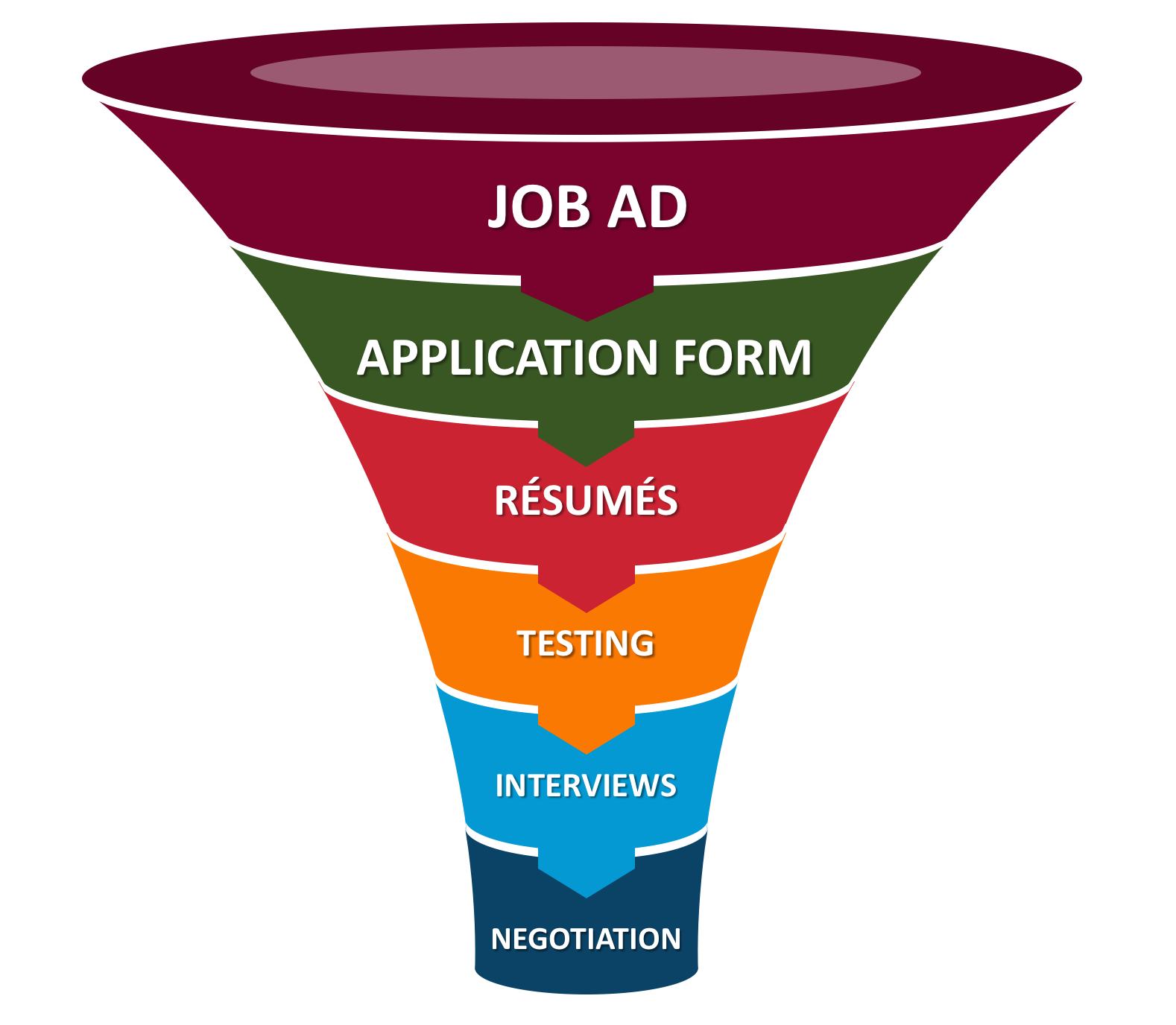

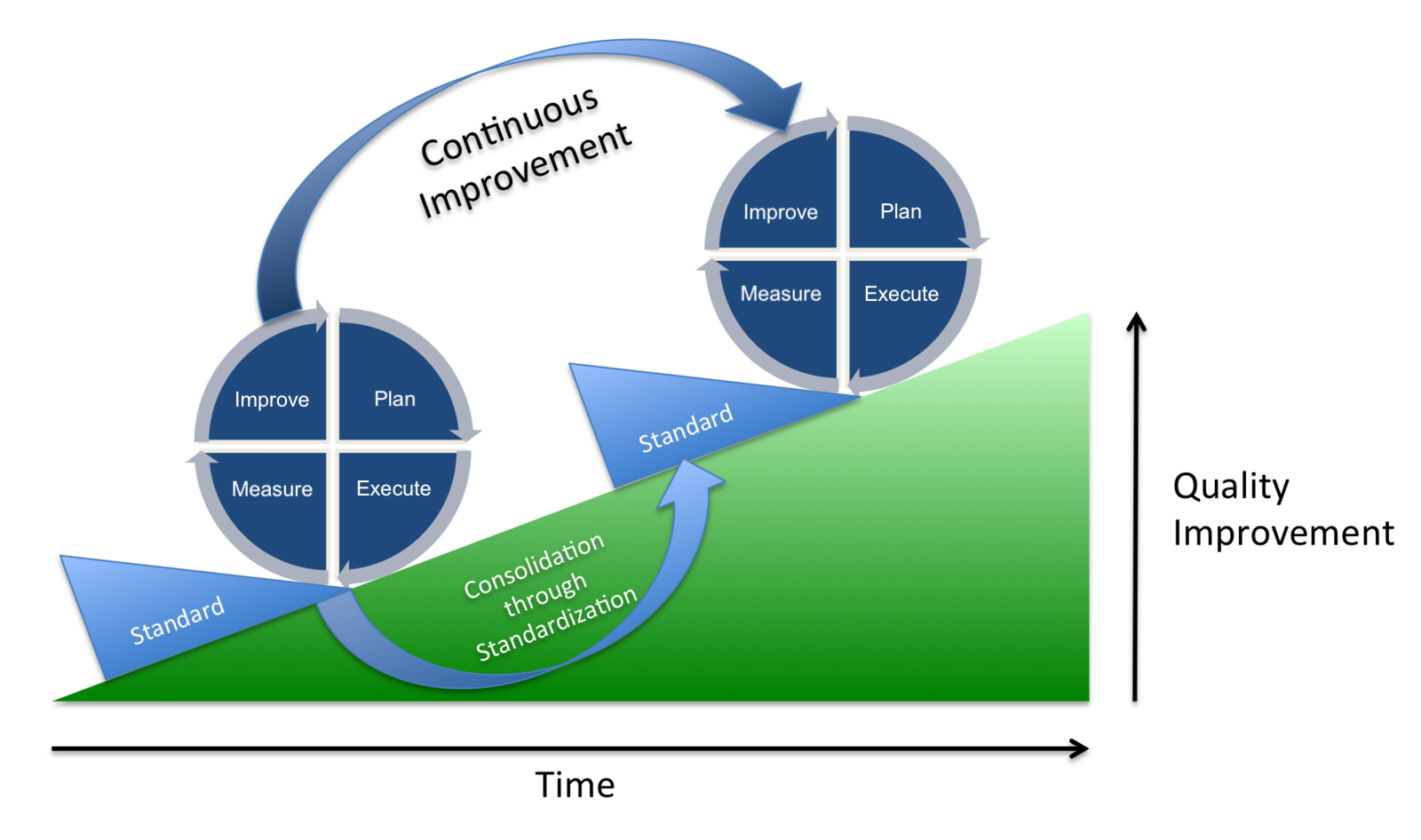

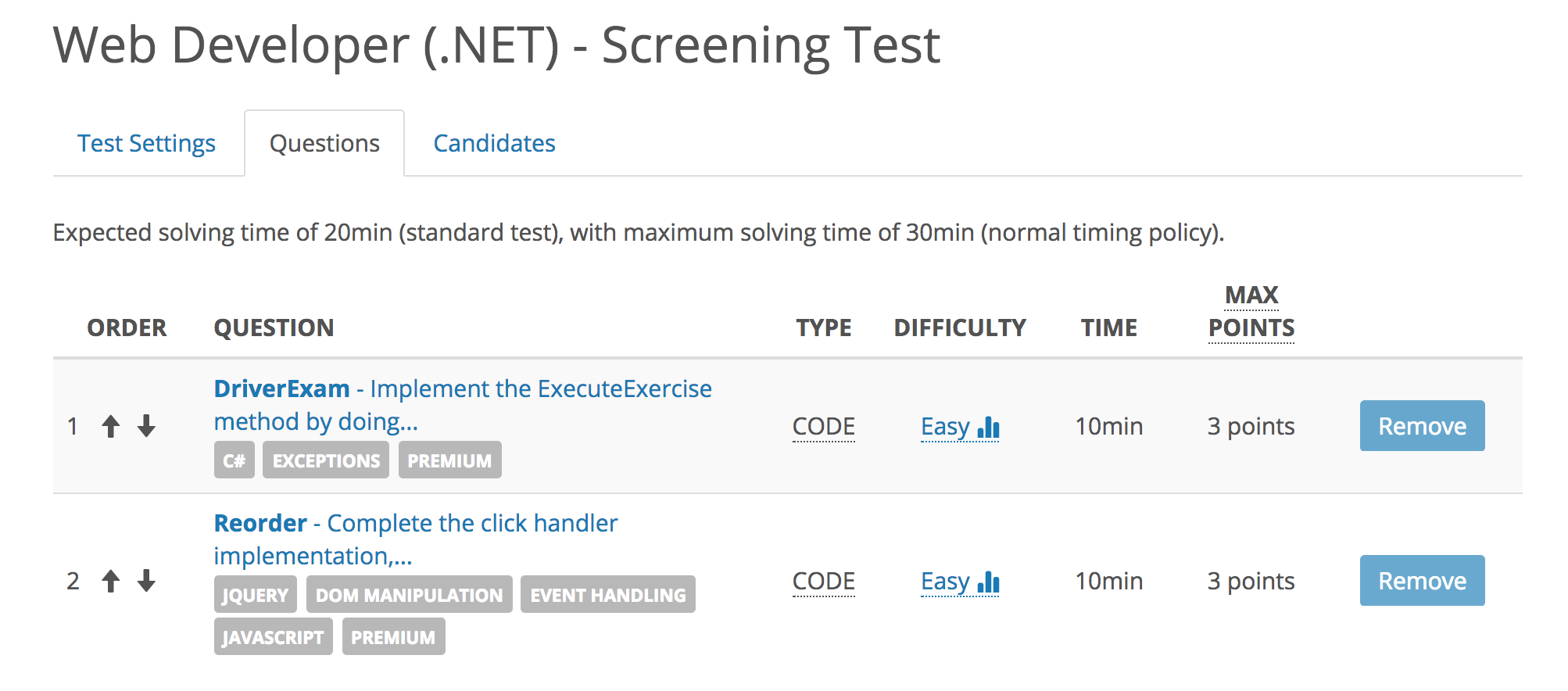

If the information gathered at a step doesn’t give us criteria to exclude a candidate, it is not relevant for screening. All steps together form a funnel, with the most efficient methods at the top, see the next figure.

Note that the funnel diagram is not to scale, as each step is drawn just slightly smaller than the previous one. In reality, the number of candidates can be 5 to 10 times smaller at each step (e.g., 2,000 job ad views and only 200 applications). Automation is most effective at the top of the funnel, where the largest numbers of candidates are considered, so that is where it saves the most time.
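The funnel arithmetic can be sketched in a few lines of Python. Every number here is hypothetical apart from the 200 applications from the example above: the stage names and their order, the 20% pass rate at each stage (the 5-times reduction at the low end of the range above), and the minutes of company time per candidate, with 0 for an automated test.

```python
from typing import List, NamedTuple, Tuple


class Stage(NamedTuple):
    name: str
    pass_rate: float              # share of candidates who move on to the next stage
    minutes_per_candidate: float  # company time per candidate entering the stage


def run_funnel(applicants: int, stages: List[Stage]) -> Tuple[List[Tuple[str, int]], float]:
    """Return how many candidates enter each stage and the total company minutes."""
    remaining = applicants
    entering = []
    total_minutes = 0.0
    for stage in stages:
        entering.append((stage.name, remaining))
        total_minutes += remaining * stage.minutes_per_candidate
        remaining = round(remaining * stage.pass_rate)
    entering.append(("Finalists", remaining))
    return entering, total_minutes


stages = [
    Stage("Automated short test", pass_rate=0.2, minutes_per_candidate=0),
    Stage("Manually reviewed work sample", pass_rate=0.2, minutes_per_candidate=30),
    Stage("Structured interview", pass_rate=0.2, minutes_per_candidate=60),
]

counts, minutes = run_funnel(200, stages)
for name, count in counts:
    print(f"{name}: {count}")
print(f"Company time: {minutes / 60:.1f} hours")
```

If the first stage were a 15-minute manual review instead, the 200 applicants alone would add 3,000 minutes (50 hours), which is why automation pays off most at the top of the funnel.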

Ready? Excited? Let’s get started by looking at the first step in our new screening funnel: the Job Ad.

The Job Ad

Take a look at this sample job ad:

Rockstar Developer Wanted

Do you want to change the world? Join AirBabysitters and revolutionize the babysitting business. Millions of families have problems finding short-term babysitters, and millions of students would like to earn extra money. We connect them, giving parents time for themselves and giving students nice and flexible work, thus disrupting the on-demand childcare sector. Our platform is a web app, and you will be one of the rockstars developing it. It needs to be mobile friendly, rock-solid, and scalable to millions of users.

If you have technical expertise, join our awesome team. We are very friendly, informal, and have a flexible work schedule. Last but not least, we have a sweet downtown office with a ping-pong table, an in-house yoga teacher, and bulletproof coffee on tap!

If interested, send your résumé to our email: …

Do you think it does a good job of summarizing the company and the vacancy? Let’s imagine the following story:

Joe, a young father of two, likes the ad. He is acutely aware of the problem of finding a babysitter whenever he wants to take his wife to dinner and a movie. He applies. His enthusiasm shows in the interviews, and his twelve years of experience don’t hurt either. He gets the job.

The problems begin immediately. Although he knows everything about front-end development, the AirBabysitters back end is written in Java, and he hasn’t used Java in years. Also, after the first week, he finds that he’s in charge of technical customer support, a job he has never liked. But the biggest problem is the work schedule. His coworkers are mostly young and childless, and arrive at work after 10am. They stay until 7pm or even later, drinking beer or playing ping-pong. Joe’s kids are in kindergarten, so he’s on a completely different rhythm: he’s in at 8am after dropping them off and leaves at 4pm to pick them up. This is a problem for him and his colleagues, as many technical issues appear later in the day. Even worse, every second weekend they work overtime because of important deadlines. The last time they had weekend overtime, Joe refused to participate, arguing that he needs his weekends for his family. The founder who hired him gets angry. It turns out that “flexible work schedule” meant the employee was supposed to be flexible, not the company. In the end, five months in, they agree to terminate the contract by mutual consent. Joe’s furious: the startup that helps young parents fired him because he’s a young parent. The company founder is equally mad and shouts at HR for doing a bad job of screening employees for “cultural fit.”

Withholding relevant information in a job ad to look “sexier” only hurts your company (and future hires) in the end. Your selection process doesn’t start with the first question in an interview, or the first time you screen a résumé. It starts with the job ad.

If you take a look at the Rockstar Developer job ad again, it fails to mention many things that Joe and other candidates would find relevant:

Work schedule.

Expected salary range.

Overtime work.

Day-to-day tasks (e.g., technical support).

Required skills (e.g., back-end Java experience).

Whether the company offers stock options, remote work, or visa sponsorship.

And, while we’re at it, what do buzzwords like “rockstar developer” or “awesome team” even mean? The job ad asks, “Do you want to change the world?” Everybody wants to change the world, or, at least, their small corner of it, so there’s no filtering happening there.

Let’s rewrite that job ad to include the relevant information:

Full-time Web Developer (HTML/JS/Java) in a Startup

AirBabysitters is a platform that connects parents and casual babysitters. We already have thousands of users and we’re growing rapidly.

We are hiring a full-time web developer who will work on the following:

- Front end (HTML/CSS, JavaScript).

- Back end (Java and PostgreSQL).

- Architecture of the entire platform.

- Technical customer support (25% of your time).

Our office is downtown, with parking in the same building. We are a team of 15: mostly young, informal, and very diverse. Most people in the office work from 10am until 7pm, with a long lunch break. We often have beers or dinner after work. Because of deadlines, we sometimes work on weekends, but we compensate with overtime pay or extra vacation days. You are expected to travel at least twice a year to conferences in Las Vegas and New York.

The salary range is $70,000 to $90,000 per year, but we also offer 0.4% equity with a two-year vesting period. Salary depends on your level of experience and knowledge.

Minimum requirements are good knowledge of HTML/CSS, JavaScript, Java, and SQL, and three years of experience. We will screen candidates with an online test (60 minutes of your time). Selected candidates will be invited to a 30-minute Skype interview and a 90-minute face-to-face interview at our office.

We need someone who can begin working next month. Unfortunately, we can’t offer visa sponsorship or remote work.

If interested, please apply using this form: …

If Joe had seen this ad, he would never have applied. He could easily see that the job requires Java and technical support, which he dislikes, and a late working schedule, which he can’t manage. On the other hand, we can easily imagine the following person:

Emma found this job to be a perfect match. She is young and single, often going out and sleeping late into the morning. Hanging out with coworkers is really important to her. The salary is in her expected range, and she would even get equity. She doesn’t mind working some weekends, and she can use the extra vacation days for her next trip to Thailand.

While the second job ad is less sexy, it is much more specific, so it filters out more job seekers. Candidates are perfectly capable of screening themselves, at zero cost to you, if you give them all the relevant information. In hiring, being honest and transparent pays in the long run. The last thing your company needs is a dissatisfied employee leaving after five months because you over-promised in the beginning.

If you don’t know what criteria to put in the job ad, think of the most common reasons why your candidates fail at interviews. You can probably add text to the job ad that would have deterred them from applying in the first place.

The Application Form

The job ad ended with instructions on how to apply. As a rookie employer, I wrote this:

“If interested, send your résumé and cover letter to our email: …”

I learned the hard way that this is a mistake. My inbox instantly filled with 30 résumés, two pages long on average, and 30 cover letters that were obviously copied and pasted from prepared templates. I was stuck with 70+ pages of tedious reading that didn’t give me the information I actually needed to eliminate candidates. As I mentioned in Chapter 2: Proxies and Biases in Hiring, most people have a polished résumé, full of buzzwords and examples of how they’ve changed the world. Switching to an application form makes a huge difference.

Why?

Because, after the biographical details, you can sneak in a few quick, selective questions. You shouldn’t overdo it, because candidates tend to abandon long application forms. In my companies, 20 minutes is the maximum a candidate should spend applying, and we estimate that it takes them five minutes to fill in their name, email, and LinkedIn profile and upload a résumé. That leaves 15 minutes for quick screening questions. While higher-level questions, such as those at the creating level of Bloom’s hierarchy, are usually too long to include, we can comfortably fit in three to five applying-level, multiple-choice questions. Every question that we include must meet two requirements:

The question must be basic enough that, if the applicant answers incorrectly, they will be eliminated from the process.

The question must be difficult enough that at least 20% of applicants will fail it.
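The time budget and the two requirements above can be expressed as a simple selection rule. This Python sketch assumes you have a pilot failure rate for each candidate question and a yes/no judgment on whether failing it should disqualify an applicant. The question names and numbers in the usage example are hypothetical, and the greedy “most selective first” ordering is one reasonable choice, not the only one.

```python
from dataclasses import dataclass

MAX_APPLICATION_MINUTES = 20  # the most a candidate should spend applying
BIOGRAPHICAL_MINUTES = 5      # name, email, LinkedIn profile, résumé upload
MIN_FAILURE_RATE = 0.20       # at least 20% of applicants should fail a question


@dataclass
class Question:
    name: str
    minutes: int
    failure_rate: float         # share of applicants who failed it in a pilot (assumed data)
    failing_disqualifies: bool  # judgment call: is it basic enough to eliminate on?


def select_questions(pool, max_questions=5):
    """Pick questions that meet both requirements and fit the remaining time budget."""
    budget = MAX_APPLICATION_MINUTES - BIOGRAPHICAL_MINUTES  # 15 minutes
    eligible = [
        q for q in pool
        if q.failing_disqualifies and q.failure_rate >= MIN_FAILURE_RATE
    ]
    # Greedy choice: consider the most selective questions first.
    eligible.sort(key=lambda q: q.failure_rate, reverse=True)
    chosen, used = [], 0
    for q in eligible:
        if len(chosen) == max_questions:
            break
        if used + q.minutes <= budget:
            chosen.append(q)
            used += q.minutes
    return chosen, used


# Hypothetical question pool.
pool = [
    Question("Interpret a simple chart", 3, 0.45, True),
    Question("WordPress question", 3, 0.70, True),
    Question("Favorite framework", 2, 0.05, False),
    Question("SQL join basics", 3, 0.30, True),
    Question("Design a full architecture", 30, 0.80, True),
]
chosen, minutes = select_questions(pool)
print([q.name for q in chosen], minutes)
```

The 30-minute design question is skipped even though it is the most selective: on its own it would blow the 15-minute budget, which is exactly why creating-level questions don’t belong in the application form.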

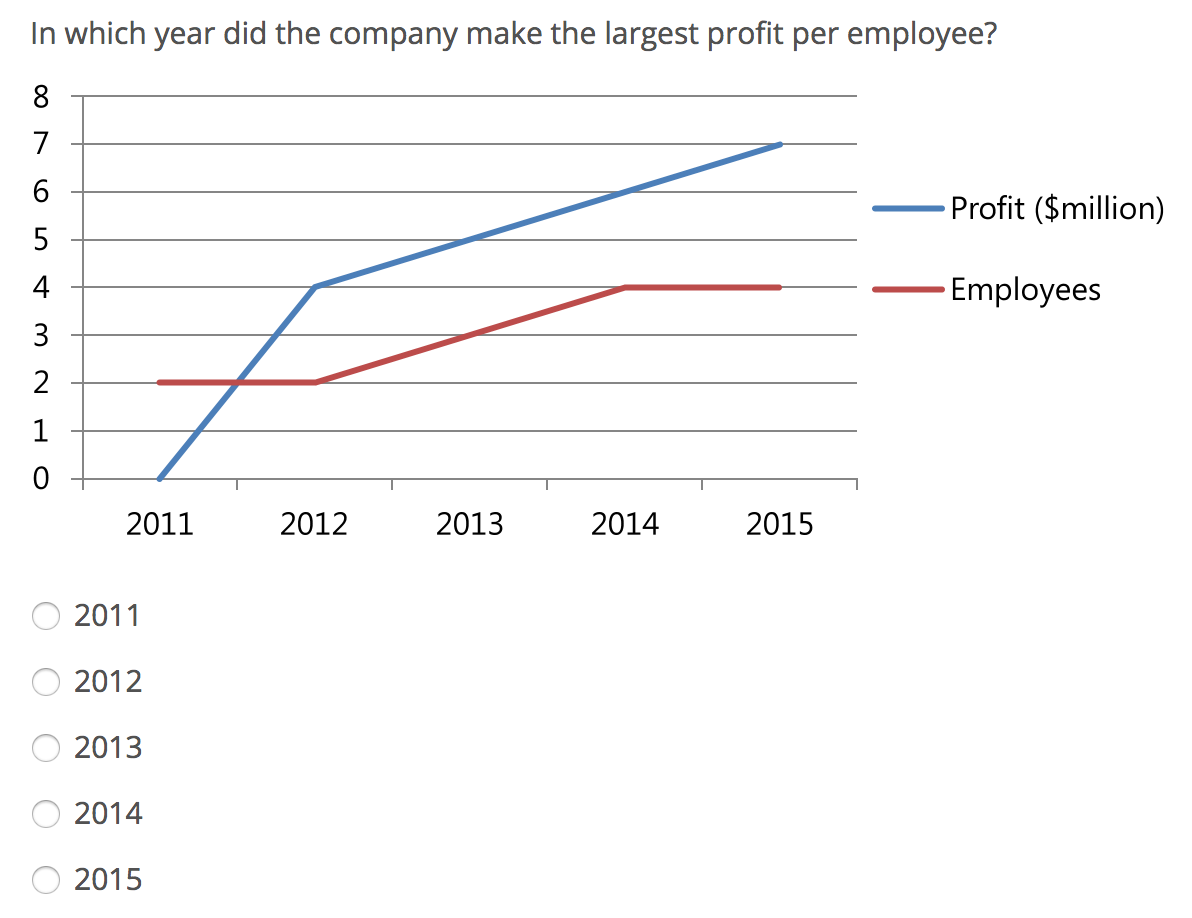

It might seem hard to find questions that are both basic and difficult, but it isn’t. For example, one of the really basic skills we want from an employee in a tech company is the ability to interpret a simple chart. The three-minute online question that checks exactly that is shown in the next figure.

To our great surprise, so many people failed this applying-level question that we classified it as hard in our testing system. Don’t get me wrong: I don’t think candidates who fail this question are bad workers. But, between two candidates, I prefer to hire the one who doesn’t need help interpreting data.

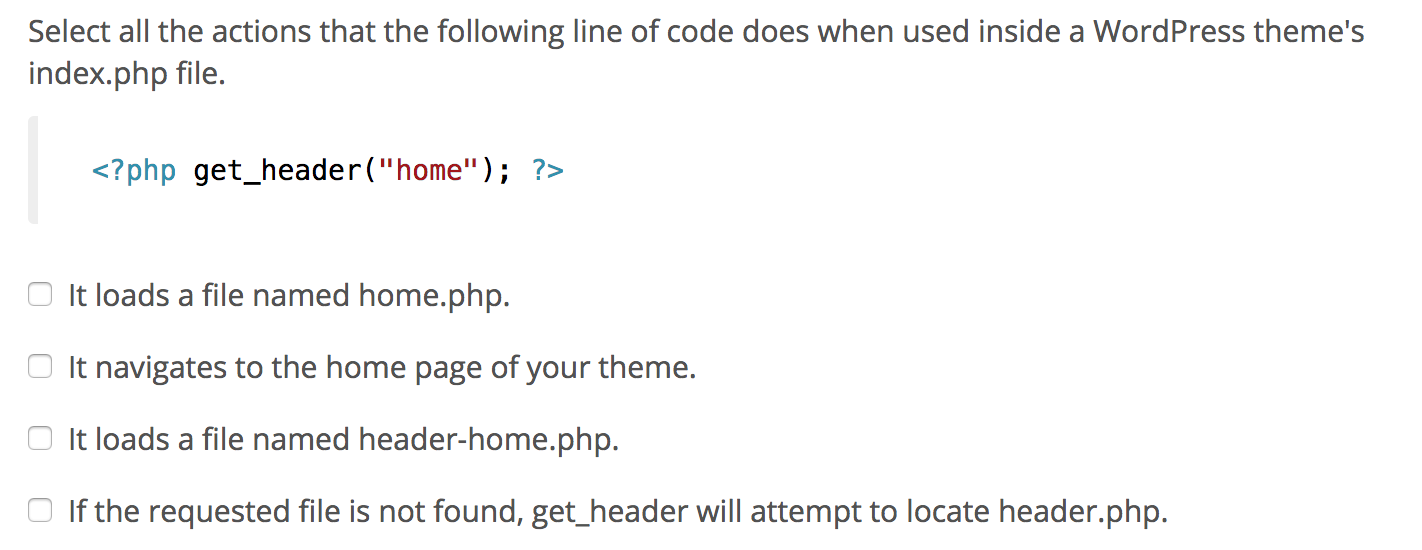

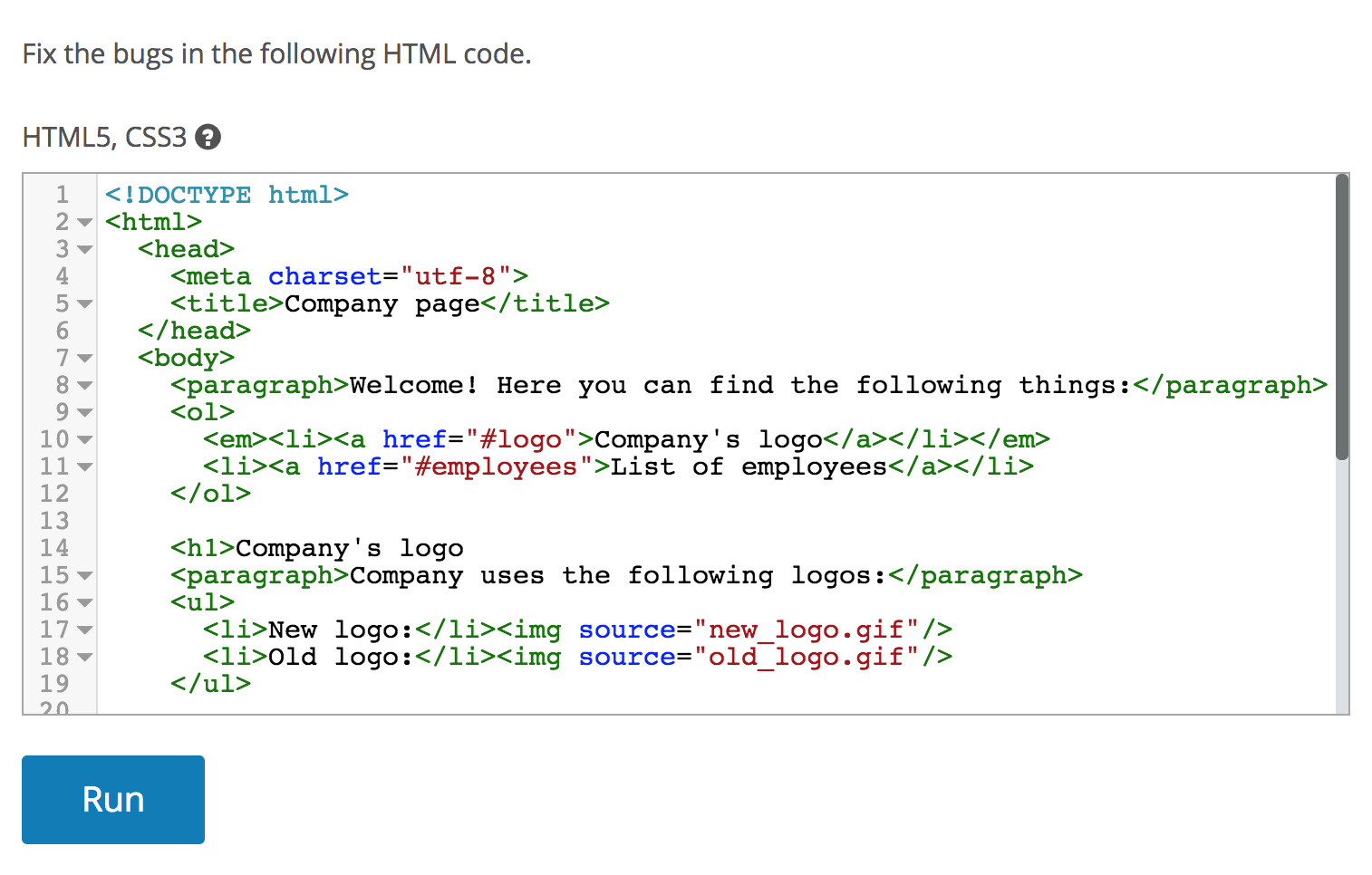

This question checked numerical reasoning, which is part of general mental ability (which, as we saw earlier, has a predictive validity of 0.51). The same system of short, demanding questions works in almost every domain of knowledge. For example, when hiring a WordPress expert, you could use the online question in the next figure.

Our data shows that more than 70% of people claiming WordPress expertise can’t achieve a full score on this question, even with three minutes to google the answer in a separate tab. Given two candidates, I prefer to give the job to the one who actually has the expertise they claim.

Again, as with the job ad, if you’re not sure what questions to ask in your application form, think of basic questions that candidates fail in your interviews.

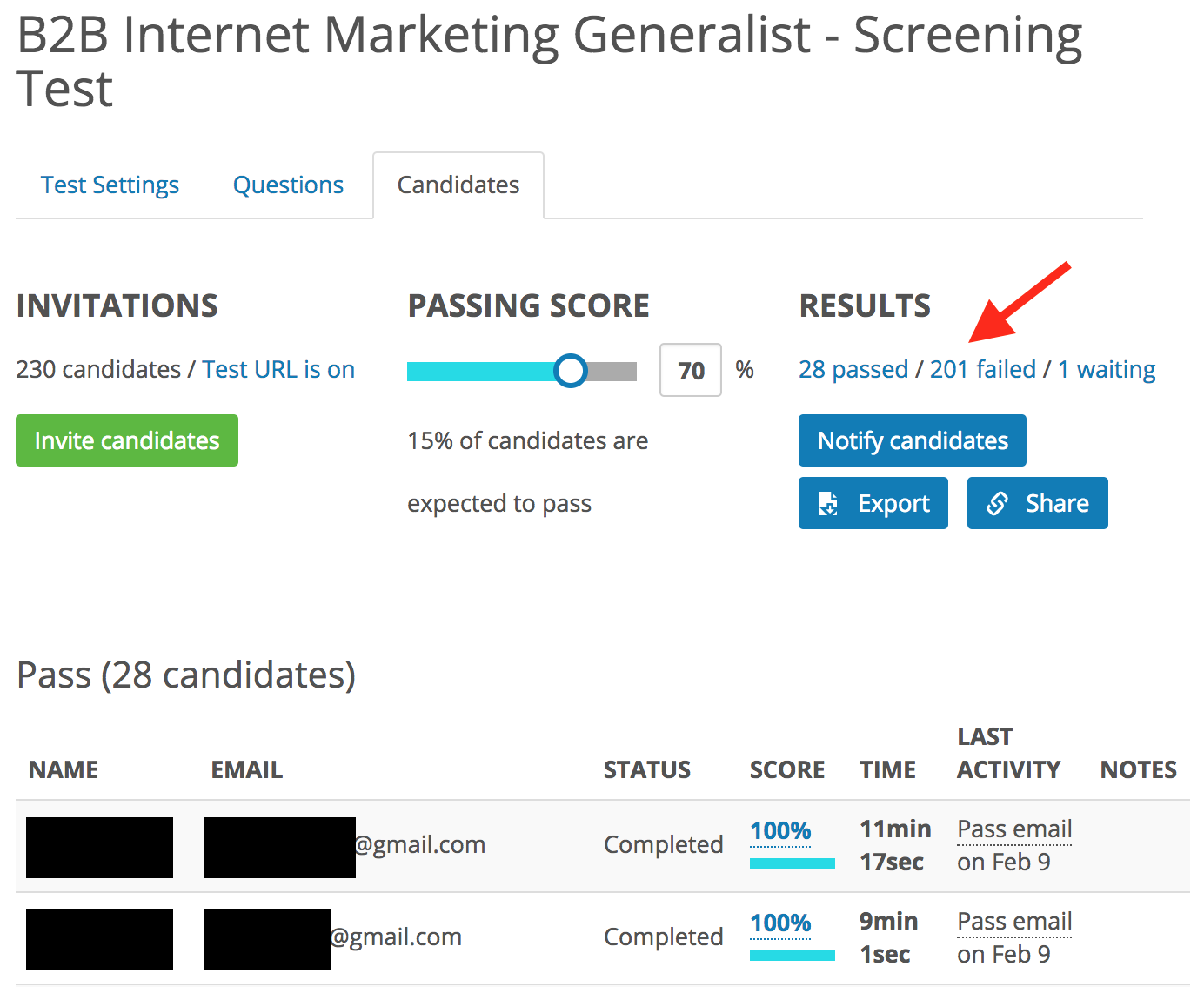

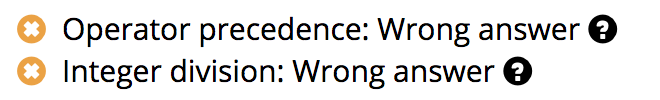

When you do it right, you get results like those from the application test in the next figure.

For this remote-working marketing position, we got 230 applications. After the application test, 201 candidates had failed to reach the passing score of 70%. That was 201 résumés and cover letters that we didn’t have to read, allowing us to focus on just the top 28 candidates. It was also a great experience for these 28 candidates, because they qualified for the next round in just ten minutes. And they knew that they had done well, since our testing system displays their score at the end of the test.
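Applying a passing score is the easiest step in the funnel to automate. Here is a minimal Python sketch, assuming scores are stored as fractions between 0 and 1; the candidate labels and scores are made up for illustration.

```python
PASSING_SCORE = 0.70  # the passing score used for the marketing position


def split_by_score(scores, passing_score=PASSING_SCORE):
    """Split {candidate: score} into (passed, failed); scores are fractions from 0 to 1."""
    passed = sorted(
        ((name, s) for name, s in scores.items() if s >= passing_score),
        key=lambda item: item[1],
        reverse=True,  # strongest candidates first
    )
    failed = [(name, s) for name, s in scores.items() if s < passing_score]
    return passed, failed


# Hypothetical applicants.
scores = {"A": 0.92, "B": 0.65, "C": 0.70, "D": 0.40}
passed, failed = split_by_score(scores)
print(f"{len(passed)} passed, {len(failed)} résumés you don't need to read")
```

Sorting the passing candidates by score lets you start with the strongest applicants while the rest are still being reviewed.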

To conclude, short screening questions embedded within the application form are the second most effective screening method, after a properly written job ad.

Screening Résumés

The next step is reading résumés. Résumés should be read for what they are: marketing brochures. We need to wade through the hyperbole haystack in search of the fact-needles we require. To do so in a structured way, make a list of relevant requirements before reading any résumé. This is easy, since they are already stated in the job ad. For example, if you are searching for a marketing manager, the requirements might be:

Has experience in marketing projects with a total spend of at least $1 million.

Has led a team of at least five people for at least a year.

Average duration with each employer is more than a year.

It’s easier to uncover our biases when we create lists like this. Is it OK that we require experience with million-dollar projects? What if a candidate led a small team of three people for five years? Would that still qualify? You must decide in advance whether each requirement is a minimum criterion (pass or fail) or a scoring criterion (e.g., from 1 to 10).

It is probably obvious that you shouldn’t give negative points for age, gender, or ethnicity, not only because it’s illegal but because it has no predictive validity. It’s perhaps less obvious, however, that you shouldn’t give positive points for the same things either. This is called “positive discrimination,” and some companies use it in an effort to increase diversity. Mathematically, giving two positive points to a certain ethnicity is equivalent to giving two negative points to all other ethnicities. It’s just negative discrimination in disguise.
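The “negative discrimination in disguise” argument is a statement about rankings. In the Python sketch below, the two adjustments differ by the same constant (two points) for every candidate, so every pairwise gap between candidates, and therefore the ranking, comes out identical. The names, groups, and scores are hypothetical.

```python
def rank(candidates, adjust):
    """Return candidate names ordered by score plus adjustment, highest first."""
    ordered = sorted(candidates, key=lambda c: c["score"] + adjust(c), reverse=True)
    return [c["name"] for c in ordered]


# Hypothetical candidates, groups, and scores.
candidates = [
    {"name": "Ana", "group": "X", "score": 6},
    {"name": "Ben", "group": "Y", "score": 7},
    {"name": "Cyd", "group": "Z", "score": 9},
]

# "Positive" discrimination: +2 points for group X only.
bonus_for_x = rank(candidates, lambda c: 2 if c["group"] == "X" else 0)
# "Negative" discrimination: -2 points for everyone outside group X.
penalty_for_others = rank(candidates, lambda c: 0 if c["group"] == "X" else -2)

print(bonus_for_x)         # ['Cyd', 'Ana', 'Ben']
print(penalty_for_others)  # ['Cyd', 'Ana', 'Ben']
```

In both versions Ben, who scored higher than Ana, ends up ranked below her.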

While companies will have different résumé scoring procedures, they should all ask the same question: “Would we feel comfortable if our procedure was posted on the company notice board?” If the answer is no, then there’s something wrong.

After we have a fixed set of requirements, we can read résumés and search only for the relevant information. We assign each candidate a score, and they either pass or fail. This treats candidates more equally, and screening takes only 2-3 minutes per candidate.
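Here is what a fixed, written-down résumé procedure might look like for the marketing manager example. This Python sketch is hypothetical: it treats the spend and tenure requirements as pass/fail minimum criteria and turns the team-leadership requirement into a 1-10 scoring criterion (one point per person-year of leadership, capped at 10), which is one possible answer to the three-people-for-five-years question. Every failed criterion is written down, so someone else can review the decision.

```python
from dataclasses import dataclass, field


@dataclass
class ResumeFacts:
    """Facts a reviewer extracts from one résumé (hypothetical fields).

    Age, gender, and ethnicity are deliberately absent: they earn neither
    negative nor positive points.
    """
    candidate: str
    largest_marketing_spend: int  # total spend in dollars
    largest_team_led: int         # number of people
    years_leading_team: float
    average_years_per_employer: float


@dataclass
class ScreeningResult:
    candidate: str
    passed: bool
    score: int  # 1 to 10
    notes: list[str] = field(default_factory=list)


def screen(facts: ResumeFacts) -> ScreeningResult:
    notes = []
    # Minimum criteria (pass or fail), fixed before reading any résumé.
    if facts.largest_marketing_spend < 1_000_000:
        notes.append("No marketing project with a total spend of at least $1 million")
    if facts.average_years_per_employer <= 1:
        notes.append("Average duration with each employer is not more than a year")
    # Scoring criterion (hypothetical rule): one point per person-year of
    # team leadership, clamped to the 1-10 range.
    person_years = facts.largest_team_led * facts.years_leading_team
    score = max(1, min(10, round(person_years)))
    return ScreeningResult(facts.candidate, passed=not notes, score=score, notes=notes)


# Under this rule, a team of three led for five years earns the maximum score.
print(screen(ResumeFacts("Candidate A", 2_500_000, 3, 5.0, 2.5)))
```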

Communicating With Candidates

Imagine that you are a very talented candidate. You applied to a few companies and passed their initial tests. You know you are good. Why would you spend time progressing through multiple stages with companies that don’t get back to you for weeks?

Therefore, for most companies, the key communication begins after the application form and résumé screening. By now, you’ve screened out most candidates. You can inform everyone who failed, thanking them for their time. Next, you need to motivate the candidates who passed to invest effort in the upcoming stages. No big words or motivational speeches are needed here. You just need to let good candidates know:

They passed the application form questions.

They passed the résumé screening.

You are thankful for their interest and invested time.

What the next steps in the screening process are going to be.

The name of the person who reviewed their application, together with that person’s contact details in case they have questions.
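Because the message covers the same five points every time, it lends itself to a template. Below is a minimal sketch using Python’s standard `string.Template`; the wording, the reviewer’s name, and the email address are placeholders, and the next steps are taken from the AirBabysitters job ad.

```python
from string import Template

# Placeholder wording; adapt it to your company's voice.
PASSED_SCREENING = Template("""\
Dear $candidate_name,

Thank you for your interest in the $job_title position and for the time you invested in applying.
You passed both the application form questions and our résumé screening.

Next steps:
$next_steps

I reviewed your application. If you have any questions, contact me directly at $reviewer_email.

Best regards,
$reviewer_name
""")


def passed_screening_message(candidate_name, job_title, next_steps,
                             reviewer_name, reviewer_email):
    """Fill in the template; next_steps is a list of strings, numbered in order."""
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(next_steps, start=1))
    return PASSED_SCREENING.substitute(
        candidate_name=candidate_name,
        job_title=job_title,
        next_steps=steps,
        reviewer_name=reviewer_name,
        reviewer_email=reviewer_email,
    )


print(passed_screening_message(
    "Emma",
    "Full-time Web Developer",
    ["Online test (60 minutes)",
     "30-minute Skype interview",
     "90-minute face-to-face interview at our office"],
    "Jane Doe",              # hypothetical reviewer
    "jane.doe@example.com",  # hypothetical address
))
```

`substitute` raises a `KeyError` if any placeholder is left unfilled, so a message can’t go out with a missing reviewer name or contact address.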

Having a real person address them by name adds a personal touch and shows that someone cares about their application. The level of personal attention depends on whether your company is hiring in an employer market (where unemployment is high) or a candidate market (where talent is scarce). In employer markets, email is fine as a first step. If you hire in Silicon Valley, however, email will probably not cut it. You will have to call or meet with candidates, explain why your company is a good place to work and how you can help their career, and make sure you have good reviews on Glassdoor.com.

Here are a few common questions which our candidates tend to ask at this stage, together with our answers:

Q: Is the salary range from the job ad fixed?

A: Usually, yes; otherwise we would have put a different range.

Q: Can I work part-time instead of full-time?

A: Usually no, because otherwise we would have put part-time in the job ad.

Q: How long will your process take? I am also talking with another company.

A: We can speed up your application, but the minimum time is X.

Whatever the answer is, give it directly and promptly. It is very annoying for candidates to wait days for a response or to get a convoluted answer. Your communication is a mirror of your company culture, so make it shine.

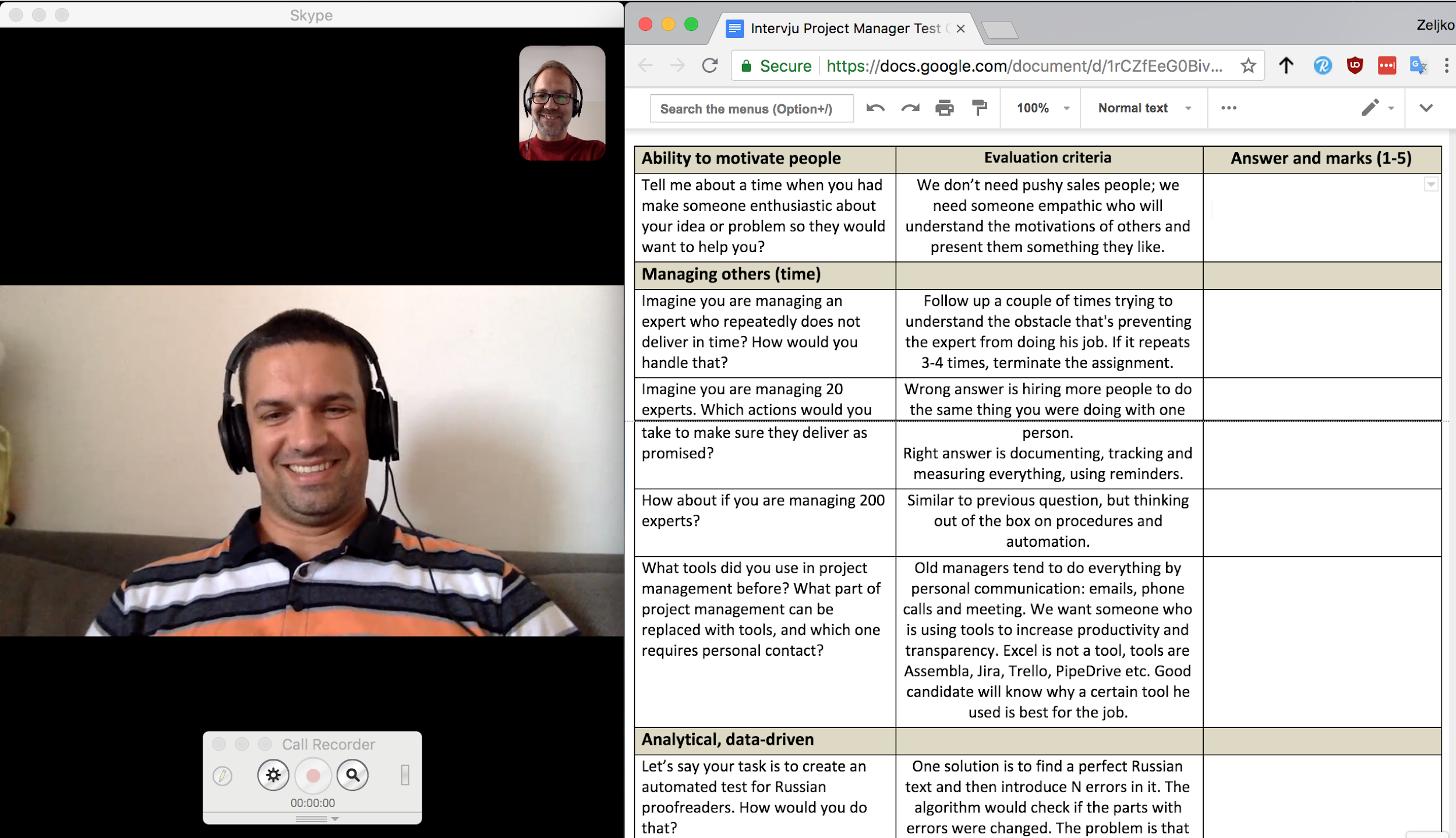

Detailed Screening Test